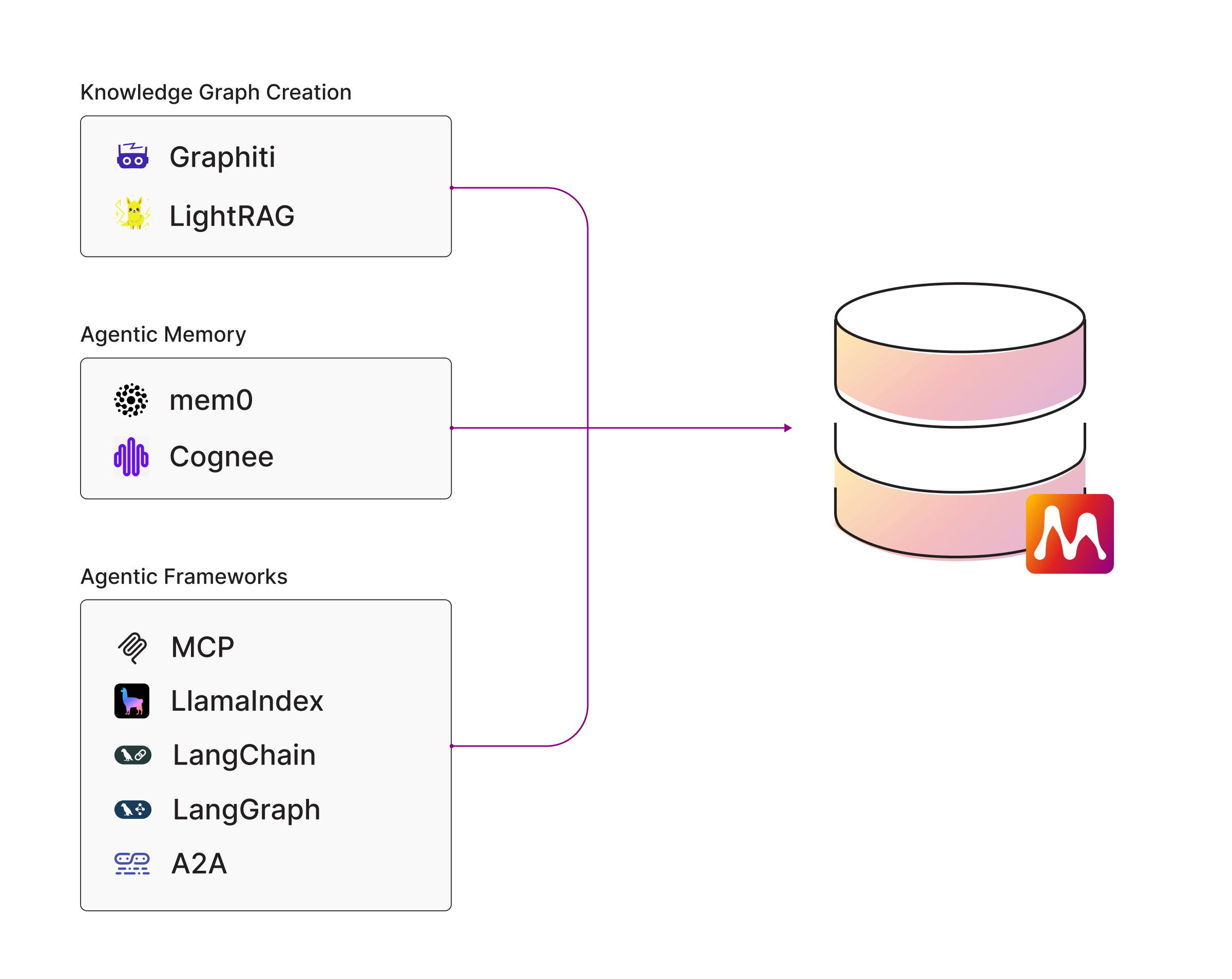

Integrations

Memgraph offers several integrations with popular AI frameworks to help you customize and build your own GenAI application from scratch. Here is a list of Memgraph’s officially supported integrations:

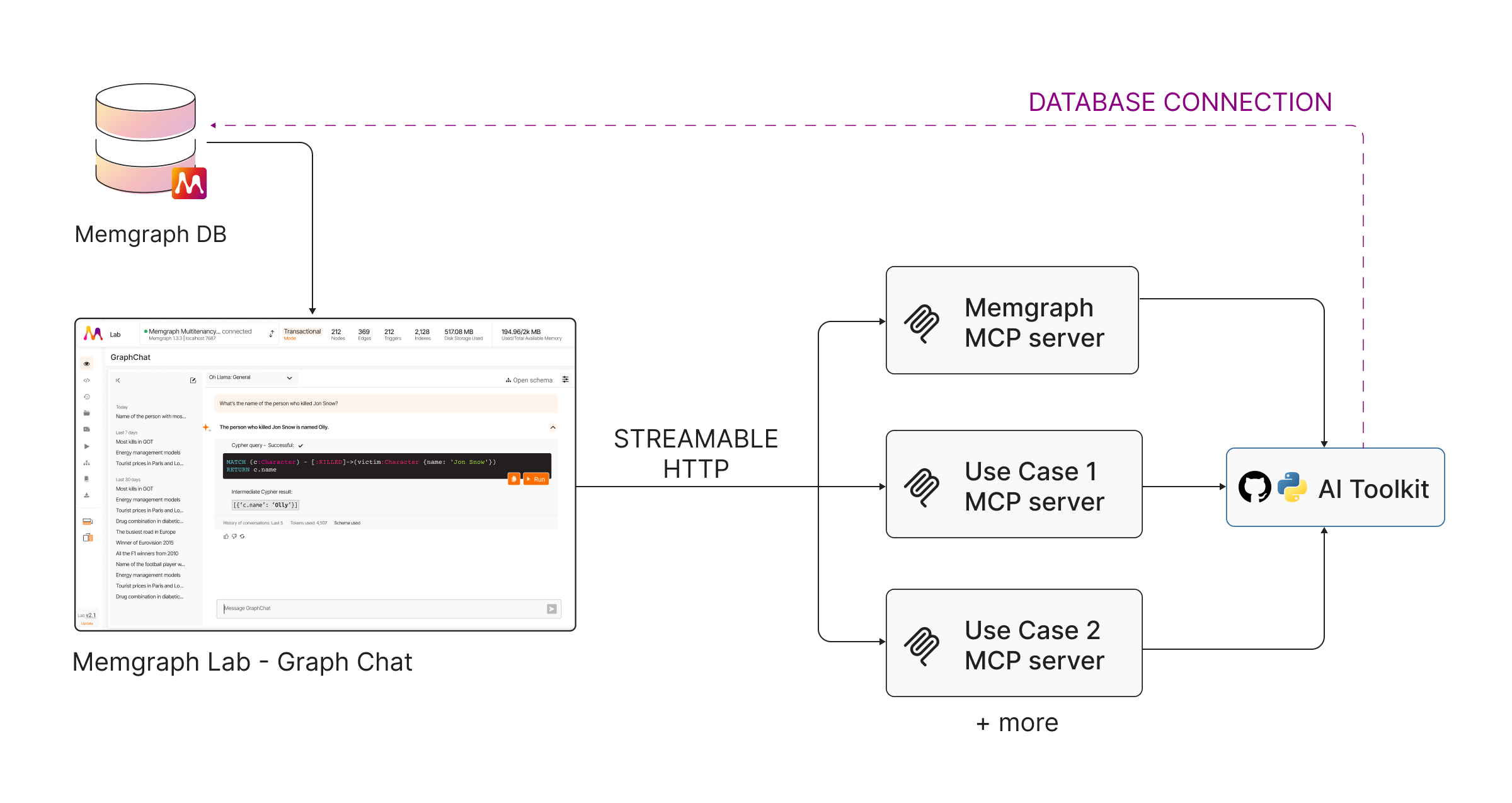

Model Context Protocol (MCP)

Memgraph offers Memgraph the Memgraph MCP Server

- a lightweight server implementation of the Model Context Protocol (MCP) designed to connect Memgraph with LLMs.

Run Memgraph MCP server

- Install

uv - Install Claude for Desktop.

- Add the Memgraph server to Claude config:

Open the config file in your favorite text editor. The location of the config file depends on your operating system:

MacOS/Linux

~/Library/Application\ Support/Claude/claude_desktop_config.jsonWindows

$env:AppData\Claude\claude_desktop_config.jsonAdd the following config:

{

"mcpServers": {

"mpc-memgraph": {

"command": "uv",

"args": [

"run",

"--with",

"mcp-memgraph",

"--python",

"3.13",

"mcp-memgraph"

]

}

}

}You may need to put the full path to the uv executable in

the command field. You can get this by running which uv on MacOS/Linux or

where uv on Windows. Make sure you pass in the absolute path to your server.

Chat with the database

-

Run Memgraph MAGE:

docker run -p 7687:7687 memgraph/memgraph-mage --schema-info-enabled=TrueThe

--schema-info-enabledconfiguration setting is set toTrueto allow LLM to runSHOW SCHEMA INFOquery. -

Open Claude Desktop and see the Memgraph tools and resources listed. Try it out! (You can load dummy data from Memgraph Lab Datasets)

Run Memgraph MCP server with Docker

Build the Docker image

To build the Docker image using your local memgraph-toolbox code, run from the

root of the monorepo:

cd /path/to/ai-toolkit

docker build -f integrations/mcp-memgraph/Dockerfile -t mcp-memgraph:latest .This will install your local memgraph-toolbox into the image.

Run the Docker image

1. Streamable HTTP mode (recommended)

To expose the MCP HTTP server locally:

docker run --rm mcp-memgraph:latestThe server will be available at http://localhost:8000/mcp/

2. Stdio mode (for integration with MCP stdio clients)

Configure your MCP host to run the docker command and utilize stdio:

docker run --rm -i -e MCP_TRANSPORT=stdio mcp-memgraph:latestBy default, the server will connect to a Memgraph instance running on

localhost docker network bolt://host.docker.internal:7687. If you have a

Memgraph instance running on a different host or port, you can specify it

using environment variables.

3. Custom Memgraph connection (external instance, no host network)

To avoid using host networking, or to connect to an external Memgraph instance:

docker run --rm \

-p 8000:8000 \

-e MEMGRAPH_URL=bolt://memgraph:7687 \

-e MEMGRAPH_USER=myuser \

-e MEMGRAPH_PASSWORD=password \

mcp-memgraph:latestConnect from developer tools

Visual Studio Code (HTTP MCP extension)

If you are using VS Code MCP extension or similar, your configuration for an HTTP server would look like:

{

"servers": {

"mcp-memgraph-http": {

"url": "http://localhost:8000/mcp/"

}

}

}The URL must end in /mcp/

Visual Studio Code (stdio using Docker)

You can also run the server using stdio for integration with MCP stdio clients:

- Open Visual Studio Code, open Command Palette (Ctrl+Shift+P or Cmd+Shift+P on

Mac), and select

MCP: Add server.... - Choose

Command (stdio) - Enter

dockeras the command to run. - For Server ID enter

mcp-memgraph. - Choose “User” (adds to user-space

settings.json) or “Workspace” (adds to.vscode/mcp.json).

When the settings open, enhance the args as follows:

{

"servers": {

"mcp-memgraph": {

"type": "stdio",

"command": "docker",

"args": [

"run",

"--rm",

"-i",

"-e", "MCP_TRANSPORT=stdio",

"mcp-memgraph:latest"

]

}

}

}To connect to a remote Memgraph instance with authentication, add environment

variables to the args list:

{

"servers": {

"mcp-memgraph": {

"type": "stdio",

"command": "docker",

"args": [

"run",

"--rm",

"-i",

"-e", "MCP_TRANSPORT=stdio",

"-e", "MEMGRAPH_URL=bolt://memgraph:7687",

"-e", "MEMGRAPH_USER=myuser",

"-e", "MEMGRAPH_PASSWORD=mypassword",

"mcp-memgraph:latest"

]

}

}

}Then open GitHub Copilot in Agent mode, and interact with Memgraph using natural language.

Resources

- Introducing the Memgraph MCP Server: A blog post on how to run Memgraph MCP Server and what are the future plans.

MCP server in Lab

Memgraph Lab has become an MCP Client and now has built-in support for MCP servers, including the Memgraph MCP Server. This means you can build your agentic applications powered by Memgraph MCP, Toolkit and Lab.

More details about the MCP connection in Memgraph Lab can be found in the Memgraph Lab documentation.

LlamaIndex

LlamaIndex is a simple, flexible data framework for connecting custom data sources to large language models. Currently, Memgraph’s integration supports creating a knowledge graph from unstructured data and querying with natural language. You can follow the example on LlamaIndex docs or go through quick start below.

Installation

To install LlamaIndex and Memgraph graph store, run:

pip install llama-index llama-index-graph-stores-memgraphEnvironment setup

Before you get started, make sure you have Memgraph running in the background.

To use Memgraph as the underlying graph store for LlamaIndex, define your graph store by providing the credentials used for your database:

from llama_index.graph_stores.memgraph import MemgraphPropertyGraphStore

username = "" # Enter your Memgraph username (default "")

password = "" # Enter your Memgraph password (default "")

url = "" # Specify the connection URL, e.g., 'bolt://localhost:7687'

graph_store = MemgraphPropertyGraphStore(

username=username,

password=password,

url=url,

)Additionally, a working OpenAI key is required:

import os

os.environ["OPENAI_API_KEY"] = "<YOUR_API_KEY>" # Replace with your OpenAI API keyDataset

For the dataset, we’ll use a text about Charles Darwin stored in the

/data/charles_darwin/charles.txt file:

Charles Robert Darwin was an English naturalist, geologist, and biologist,

widely known for his contributions to evolutionary biology. His proposition that

all species of life have descended from a common ancestor is now generally

accepted and considered a fundamental scientific concept. In a joint publication

with Alfred Russel Wallace, he introduced his scientific theory that this

branching pattern of evolution resulted from a process he called natural

selection, in which the struggle for existence has a similar effect to the

artificial selection involved in selective breeding. Darwin has been described

as one of the most influential figures in human history and was honoured by

burial in Westminster Abbey.from llama_index.core import SimpleDirectoryReader

documents = SimpleDirectoryReader("./data/charles_darwin/").load_data()The data is now loaded in the documents variable which we’ll pass as an argument in the next step of index creation and graph construction.

Graph construction

LlamaIndex provides multiple graph constructors. In this example, we’ll use the SchemaLLMPathExtractor, which allows to both predefine the schema or use the one LLM provides without explicitly defining entities.

from llama_index.core import PropertyGraphIndex

from llama_index.embeddings.openai import OpenAIEmbedding

from llama_index.llms.openai import OpenAI

from llama_index.core.indices.property_graph import SchemaLLMPathExtractor

index = PropertyGraphIndex.from_documents(

documents,

embed_model=OpenAIEmbedding(model_name="text-embedding-ada-002"),

kg_extractors=[

SchemaLLMPathExtractor(

llm=OpenAI(model="gpt-4", temperature=0.0),

)

],

property_graph_store=graph_store,

show_progress=True,

)In the below image, you can see how the text was transformed into a knowledge graph and stored into Memgraph.

Querying

Labeled property graphs can be queried in several ways to retrieve nodes and paths and in LlamaIndex, several node retrieval methods at once can be combined.

If no sub-retrievers are provided, the defaults are LLMSynonymRetriever and VectorContextRetriever, if supported.

From the latest update, LlamaIndex utilizes Memgraph’s vector search feature in the background to enhance retrieval. This integration enables faster and more accurate querying by leveraging vector similarity searches for embeddings stored in the graph, leading to precise and context-aware answers.

query_engine = index.as_query_engine(include_text=True)

response = query_engine.query("Who did Charles Robert Darwin collaborate with?")

print(str(response))In the image below, you can see what’s happening under the hood to get the answer.

Demos

If you’d like to take it one step further, explore how Memgraph and LlamaIndex work together in real-world applications with these interactive demos:

- Single-agent GraphRAG system: Learn how to build an agent-powered graph retrieval-augmented generation (RAG) system using Memgraph and LlamaIndex.

- Multi-agent GraphRAG System: Dive into a more advanced setup with multiple agents collaborating in a GraphRAG system.

LangChain

LangChain is a framework for developing applications powered by large language models (LLMs).

Memgraph has an integration with LangChain which supports Memgraph toolkit for building agentic applications, knowledge graph construction from unstructured data and MemgraphQAChain for querying via natural language.

Recently, we migrated the Memgraph LangChain integration to the repository under Memgraph organization for easier management.

Memgraph toolkit

The LangGraph framework enables users to build agentic applications. Memgraph now offers a toolkit for building agents that can autonomously interact with the Memgraph database.

Currently, the Memgraph toolkit supports the following tools:

- RunQueryTool: Executes Cypher queries on the Memgraph database.

- RunShowIndexInfoTool: Retrieves information about the indexes in the database.

- RunShowSchemaInfoTool: Retrieves information about the schema in the database.

- RunShowConfigTool: Retrieves information about the configuration of the database.

- RunShowStorageInfoTool: Retrieves information about the storage engine of the database.

- RunShowTriggersTool: Retrieves information about the triggers in the database.

- RunShowConstraintInfoTool: Retrieves information about the constraints in the database.

- RunBetweennessCentralityTool: Calculates the betweenness centrality of nodes in the graph.

- RunPageRankMemgraphTool: Calculates the PageRank of nodes in the graph.

We just started building Memgraph toolkit. In case you’re interested into having more tools, please open an issue on our repository or open a pull request and contribute.

Installation

Before starting to write code, make sure you have installed the required packages in your environment:

pip install langchain langchain-openai langchain-memgraph langgraphDon’t forget to install langgraph, as it is a prerequisite to use Memgraph

toolkit.

Environment setup

Make sure you have Memgraph running in the background.

After that, you can instantiate Memgraph in your Python code. This object holds the

connection to the running Memgraph instance.

import os

from getpass import getpass

import pytest

from dotenv import load_dotenv

from langchain.chat_models import init_chat_model

from langgraph.prebuilt import create_react_agent

from langchain_memgraph import MemgraphToolkit

from memgraph_toolbox.api.memgraph import Memgraph

"""Setup Memgraph connection fixture."""

url = os.getenv("MEMGRAPH_URI", "bolt://localhost:7687")

username = os.getenv("MEMGRAPH_USERNAME", "")

password = os.getenv("MEMGRAPH_PASSWORD", "")

graph = MemgraphLangChain(

url=url, username=username, password=password, refresh_schema=False

)

"""Set up Memgraph agent with React pattern."""

if not os.environ.get("OPENAI_API_KEY"):

os.environ["OPENAI_API_KEY"] = getpass("Enter API key for OpenAI: ")

llm = init_chat_model("gpt-4o-mini", model_provider="openai")

db = Memgraph(url=url, username=username, password=password)

toolkit = MemgraphToolkit(db=db, llm=llm)

The refresh_schema is initially set to False because there is still no data

in the database, and we want to avoid unnecessary database calls. The code above

also initializes the LLM chat model from OpenAI and gets the toolkit for Memgraph.

Agent setup:

After setting up Memgraph and the toolkit, you can create an agent that will use the toolkit to solve particular problem:

agent_executor = create_react_agent(

llm,

toolkit.get_tools(),

prompt="You will get a cypher query, try to execute it on the Memgraph database.",

)This is a simple example of an agent using a tool which executes Cypher query.

Running agent

Now, we can create a node in the database and run the agent:

query = """

CREATE (c:Character {name: 'Jon Snow', house: 'Stark', title: 'King in the North'})

"""

memgraph_connection.query(query)

memgraph_connection.refresh_schema()

example_query = "MATCH (n) WHERE n.name = 'Jon Snow' RETURN n"

events = memgraph_agent.stream(

{"messages": [("user", example_query)]},

stream_mode="values",

)

last_event = None

for event in events:

last_event = event

event["messages"][-1].pretty_print()

print (last_event)

The agent will autonomously pick a tool and use the toolkit to solve the requested problem.

Querying unstructured data

You can follow the example on LangChain docs or go through quick start below.

Recently, we migrated the Memgraph LangChain integration to the repository under Memgraph organization for easier management.

Installation

To install all the required packages, run:

pip install langchain langchain-openai langchain-memgraph langchain-experimentalEnvironment setup

Before you get started, make sure you have Memgraph running in the background.

Then, instantiate Memgraph in your Python code. This object holds the

connection to the running Memgraph instance. Make sure to set up all the

environment variables properly.

import os

from langchain_openai import ChatOpenAI

from langchain_experimental.graph_transformers import LLMGraphTransformer

from langchain_memgraph.graphs.graph_document import Document

from langchain_memgraph.chains.graph_qa import MemgraphQAChain

from langchain_memgraph.graphs.memgraph import Memgraph

url = os.environ.get("MEMGRAPH_URI", "bolt://localhost:7687")

username = os.environ.get("MEMGRAPH_USERNAME", "")

password = os.environ.get("MEMGRAPH_PASSWORD", "")

graph = MemgraphGraph(

url=url, username=username, password=password, refresh_schema=False

)The refresh_schema is initially set to False because there is still no data in

the database and we want to avoid unnecessary database calls.

To interact with the LLM, you must configure it. Here is how you can set API key as an environment variable for OpenAI:

os.environ["OPENAI_API_KEY"] = "your-key-here"Graph construction

For the dataset, we’ll use the following text about Charles Darwin:

text = """

Charles Robert Darwin was an English naturalist, geologist, and biologist,

widely known for his contributions to evolutionary biology. His proposition that

all species of life have descended from a common ancestor is now generally

accepted and considered a fundamental scientific concept. In a joint

publication with Alfred Russel Wallace, he introduced his scientific theory that

this branching pattern of evolution resulted from a process he called natural

selection, in which the struggle for existence has a similar effect to the

artificial selection involved in selective breeding. Darwin has been

described as one of the most influential figures in human history and was

honoured by burial in Westminster Abbey.

"""To construct the graph, first initialize LLMGraphTransformer from the desired

LLM and convert the document to the graph structure.

llm = ChatOpenAI(temperature=0, model_name="gpt-4-turbo")

llm_transformer = LLMGraphTransformer(llm=llm)

documents = [Document(page_content=text)]

graph_documents = llm_transformer.convert_to_graph_documents(documents)The graph structure in the GraphDocument format can be forwarded to the

add_graph_documents() procedure to import in into Memgraph:

# Make sure the database is empty

graph.query("STORAGE MODE IN_MEMORY_ANALYTICAL")

graph.query("DROP GRAPH")

graph.query("STORAGE MODE IN_MEMORY_TRANSACTIONAL")

# Create KG

graph.add_graph_documents(graph_documents)The add_graph_documents() procedure transforms the list of graph_documents

into appropriate Cypher queries and executes them in Memgraph.

In the below image, you can see how the text was transformed into a knowledge graph and stored into Memgraph.

For additional options, check the full guide on the LangChain docs.

Querying

In the end, you can query the knowledge graph:

chain = MemgraphQAChain.from_llm(

ChatOpenAI(temperature=0),

graph=graph,

model_name="gpt-4-turbo",

allow_dangerous_requests=True,

)

print(chain.invoke("Who Charles Robert Darwin collaborated with?")["result"])Here is the result:

MATCH (:Person {id: "Charles Robert Darwin"})-[:COLLABORATION]->(collaborator)

RETURN collaborator;

Alfred Russel WallaceIn the image below, you can see what’s happening under the hood to get the answer.

Cognee

Cognee is an AI-powered toolkit for cognitive search and graph-based knowledge representation. It leverages LLMs to extract structured concepts and relationships from natural language, storing them in Memgraph as a knowledge graph. This enables semantic search and graph querying over unstructured text. You can follow the Jupyter Notebook example or go through quick start below.

Installation

Before you begin, ensure the following are installed:

- Docker: Install Docker

- Memgraph: Run Memgraph using the recommended script:

# macOS/Linux

curl https://install.memgraph.com | sh

# Windows

iwr https://windows.memgraph.com | iexThis launches Memgraph on localhost:3000.

- Poetry and dependencies:

poetry init

poetry add cognee

pip install neo4jEnvironment setup

Create a .env file in your project root and define your configuration:

LLM_API_KEY=sk-...

LLM_MODEL=openai/gpt-4o-mini

LLM_PROVIDER=openai

EMBEDDING_PROVIDER=openai

EMBEDDING_MODEL=openai/text-embedding-3-large

GRAPH_DATABASE_PROVIDER=memgraph

GRAPH_DATABASE_URL=bolt://localhost:7687

GRAPH_DATABASE_USERNAME=" "

GRAPH_DATABASE_PASSWORD=" "In your script or notebook, load the environment:

from dotenv import load_dotenv

load_dotenv()Using Cognee

Once the environment is set up, you can start processing text with Cognee:

import cognee

import asyncio

async def run():

text = "Natural language processing (NLP) is a subfield of computer science focused on the interaction between computers and human language."

# Add text to Cognee

await cognee.add(text)

# Generate the knowledge graph with LLMs

await cognee.cognify()

# Semantic search

results = await cognee.search(query_text="What is NLP?")

print("Search Results:\n")

for result in results:

print(result)

await run()What happens under the hood?

cognee.add(): Stores unstructured input text.cognee.cognify(): Converts text to a structured graph using LLMs and stores it in Memgraph.cognee.search(): Runs semantic search queries using vector embeddings and graph context.

Mem0

Mem0 is a framework for managing memory in AI systems, with support for graph-based storage and retrieval. Memgraph can be used as a backend for Mem0’s Graph Memory, enabling scalable, queryable memory storage for agentic workflows and intelligent applications.

Installation

Before you start, ensure you have the following are installed:

- Docker: Install Docker

- Install Mem0 with Graph Memory support Use pip to install Mem0 with support for graph memory:

pip install "mem0ai[graph]"- Run Memgraph Start Memgraph using Docker with schema support enabled:

docker run -p 7687:7687 memgraph/memgraph-mage:latest --schema-info-enabled=TrueThe --schema-info-enabled flag improves schema extraction and indexing

performance.

Configuration

First, import the required modules and configure the OpenAI API:

from mem0 import Memory

import os

os.environ["OPENAI_API_KEY"] = "<your-openai-api-key>"Define the configuration to use OpenAI for embedding and Memgraph for graph memory storage:

config = {

"embedder": {

"provider": "openai",

"config": {

"model": "text-embedding-3-large",

"embedding_dims": 1536,

},

},

"graph_store": {

"provider": "memgraph",

"config": {

"url": "bolt://localhost:7687",

"username": "memgraph",

"password": "mem0graph",

},

},

}Graph Memory initialization

Initialize the Mem0 memory store using the configuration:

m = Memory.from_config(config_dict=config)

Mem0 will connect to Memgraph and prepare it for memory storage.Store Memories

You can store structured conversations or memory entries like this:

messages = [

{"role": "user", "content": "I'm planning to watch a movie tonight. Any recommendations?"},

{"role": "assistant", "content": "How about a thriller movie? They can be quite engaging."},

{"role": "user", "content": "I'm not a big fan of thriller movies but I love sci-fi movies."},

{"role": "assistant", "content": "Got it! I'll avoid thriller recommendations and suggest sci-fi movies in the future."},

]

result = m.add(messages, user_id="alice", metadata={"category": "movie_recommendations"})The messages are stored in Memgraph with the associated user ID and metadata.

Search Memories

You can query stored memories using semantic search:

for result in m.search("what does alice love?", user_id="alice")["results"]:

print(result["memory"], result["score"])Example output:

Loves sci-fi movies 0.315

Planning to watch a movie tonight 0.096

Not a big fan of thriller movies 0.094If you’d like a more in-depth look at how Mem0 works with Memgraph, check out the official Mem0 docs.

LightRAG

LightRAG is a simple and fast retrieval-augmented generation framework that combines the power of graph databases with large language models. It provides efficient graph-based knowledge representation and retrieval capabilities, making it ideal for building RAG applications that require sophisticated understanding of entity relationships and context.

LightRAG supports Memgraph as a graph storage backend, offering high-performance in-memory graph operations with full compatibility with the Neo4j Bolt protocol.

Installation

To use LightRAG with Memgraph, you need to install LightRAG from source since Memgraph support is not yet available in the PyPI release:

# Clone the LightRAG repository

git clone https://github.com/HKUDS/LightRAG.git

cd LightRAG

# Install from source

pip install -e .Environment setup

Before getting started, ensure you have Memgraph running. You can quickly start Memgraph using Docker:

docker run -p 7687:7687 memgraph/memgraph:latestConfigure your environment variables for Memgraph connection:

export MEMGRAPH_URI="bolt://localhost:7687"

export MEMGRAPH_USERNAME="" # Default is empty

export MEMGRAPH_PASSWORD="" # Default is empty

export MEMGRAPH_DATABASE="memgraph" # Default database nameBasic usage

Here’s a simple example of using LightRAG with Memgraph:

Export your OPENAI_API_KEY environment variable before running the script.

import os

import asyncio

from lightrag import LightRAG, QueryParam

from lightrag.llm.openai import gpt_4o_mini_complete, gpt_4o_complete, openai_embed

from lightrag.kg.shared_storage import initialize_pipeline_status

from lightrag.utils import setup_logger

setup_logger("lightrag", level="INFO")

WORKING_DIR = "./rag_storage"

if not os.path.exists(WORKING_DIR):

os.mkdir(WORKING_DIR)

async def initialize_rag():

rag = LightRAG(

working_dir=WORKING_DIR,

embedding_func=openai_embed,

llm_model_func=gpt_4o_mini_complete,

graph_storage="MemgraphStorage", #<-----------override KG default

)

# IMPORTANT: Both initialization calls are required!

await rag.initialize_storages() # Initialize storage backends

await initialize_pipeline_status() # Initialize processing pipeline

return rag

async def main():

try:

# Initialize RAG instance

rag = await initialize_rag()

rag.insert("Your text")

# Perform hybrid search

mode = "hybrid"

print(

await rag.query(

"What are the top themes in this story?",

param=QueryParam(mode=mode)

)

)

except Exception as e:

print(f"An error occurred: {e}")

finally:

if rag:

await rag.finalize_storages()

if __name__ == "__main__":

asyncio.run(main())Advanced configuration

LightRAG provides several configuration options for working with Memgraph:

Workspace isolation: Use the MEMGRAPH_WORKSPACE environment variable to

isolate data between different LightRAG instances:

export MEMGRAPH_WORKSPACE="project_a"Custom connection parameters: You can also configure connection parameters

through a config.ini file:

[memgraph]

uri = bolt://localhost:7687

username =

password =

database = memgraphIf you’d like a more detailed guidance, check out LightRAG in the ai-toolkit repository or Jupyter Notebook example demonstrating LightRAG and Memgraph integration.

For more information about LightRAG’s advanced configurations, features, and usage examples with Memgraph, visit the LightRAG GitHub repository.