Building a Movie Similarity Search Engine with Vector Search in Memgraph

In this tutorial, we’ll show how to use Memgraph’s vector search to find movies based on their plot descriptions. We’ll use the Wikipedia Movie Plots dataset (available on Kaggle) and generate vector embeddings with the SentenceTransformers Python library.

For more information about the vector search feature, check out the article Simplify Data Retrieval with Memgraph’s Vector Search and the vector search page in the Memgraph docs.

Step 1: Launch Memgraph with Vector Search Enabled

To enable vector search in Memgraph, pass the --experimental-enabled=vector-search flag and define the vector index in the --experimental-config parameter.

Run the following Docker command to start Memgraph with the vector search feature:

```bash
docker run -p 7687:7687 -p 7444:7444 memgraph/memgraph:latest \
  --experimental-enabled=vector-search \
  --experimental-config='{"vector-search": {"movies_index": {"label": "Movie","property": "embedding","dimension": 384,"capacity": 100, "metric": "cos"}}}'
```

Here, we define a vector index named movies_index:

- Label: Movie
- Property: embedding
- Dimension: 384 (matching the embedding model used in Step 3)
- Capacity: 100 (more than enough for the Nolan subset)
- Metric: Cosine similarity (cos)
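Because the --experimental-config value is a JSON string embedded in a shell command, a stray quote or brace is easy to miss. A minimal sketch, assuming you would rather generate the value from Python: the hypothetical helper build_vector_search_config below produces the same movies_index configuration with json.dumps, using the keys from the command above (label, property, dimension, capacity, metric).

```python
import json


def build_vector_search_config(index_name, label, prop, dimension, capacity, metric="cos"):
    """Return a JSON string for Memgraph's --experimental-config flag.

    Hypothetical helper: the keys mirror the docker command above.
    """
    config = {
        "vector-search": {
            index_name: {
                "label": label,
                "property": prop,
                "dimension": dimension,
                "capacity": capacity,
                "metric": metric,
            }
        }
    }
    # Compact output; whitespace is optional in JSON, and json.dumps
    # guarantees correctly quoted keys and strings
    return json.dumps(config, separators=(",", ":"))


config_str = build_vector_search_config("movies_index", "Movie", "embedding", 384, 100)
print(f"--experimental-config='{config_str}'")
```

Generating the string this way keeps the dimension in one place, which helps if you later switch to an embedding model with a different output size.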

Step 2: Load and Preprocess the Dataset

Next, download the dataset and filter it down to Christopher Nolan’s movies, which will serve as our example data.

Load the Dataset

```python
import pandas as pd
import kagglehub

# Download the dataset from Kaggle
dataset_path = kagglehub.dataset_download("jrobischon/wikipedia-movie-plots")
df = pd.read_csv(dataset_path + "/wiki_movie_plots_deduped.csv")
```

Filter for Christopher Nolan Movies

```python
# Select Christopher Nolan movies and reset the index so that row
# positions line up with the embeddings computed in Step 3
nolan_movies = df[df['Director'] == 'Christopher Nolan'].reset_index(drop=True)
```

Step 3: Compute Vector Embeddings

Use the SentenceTransformers library with the paraphrase-MiniLM-L6-v2 model to generate 384-dimensional vector embeddings of the movie plots.

```python
from sentence_transformers import SentenceTransformer

# Load the model once and reuse it for both the import and the queries
model = SentenceTransformer('paraphrase-MiniLM-L6-v2')

def compute_embeddings(texts):
    """Return one 384-dimensional embedding per input text."""
    return model.encode(texts)

# Generate embeddings for Nolan movies
embeddings = compute_embeddings(nolan_movies['Plot'].tolist())
```

Step 4: Import Data into Memgraph
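Before creating the nodes, it can help to confirm that every embedding has the dimension configured for movies_index (384), since a vector produced by a different model would not fit the index. A minimal sketch; check_dimensions is a hypothetical helper, not part of Memgraph or SentenceTransformers, and it works on the NumPy array returned by model.encode as well as on plain lists.

```python
def check_dimensions(embeddings, expected_dim=384):
    """Raise ValueError if any embedding does not have expected_dim values.

    Hypothetical helper: accepts any sequence of sequences, such as a
    2-D NumPy array or a list of Python lists. Returns the vector count.
    """
    for i, vector in enumerate(embeddings):
        if len(vector) != expected_dim:
            raise ValueError(
                f"embedding {i} has {len(vector)} values, expected {expected_dim}"
            )
    return len(embeddings)


# With the tutorial data you would call: check_dimensions(embeddings)
print(check_dimensions([[0.0] * 384, [1.0] * 384]))
```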

Create nodes for each movie in Memgraph, storing the vector embeddings in the embedding property.

```python
import neo4j

# Connect to Memgraph (authentication is disabled by default)
driver = neo4j.GraphDatabase.driver("bolt://localhost:7687", auth=("", ""))

# Query parameters avoid manually escaping quotes in titles
query = "CREATE (m:Movie {title: $title, year: $year, embedding: $embedding})"

# Insert data into Memgraph
with driver.session() as session:
    for index, row in nolan_movies.iterrows():
        session.run(
            query,
            title=str(row["Title"]),
            year=int(row["Release Year"]),         # plain int, not numpy.int64
            embedding=embeddings[index].tolist(),  # list of Python floats
        )
```

Step 5: Query the Vector Index
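Conceptually, vector_search.search returns the stored vectors most similar to a query vector under the index metric, cosine similarity here. Vector indexes of this kind are typically approximate, so an exhaustive ranking in plain Python is a handy cross-check on a small dataset like the Nolan subset. This is an illustrative sketch of exact cosine search, not Memgraph's implementation; cosine_similarity and exact_top_k are hypothetical helpers.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def exact_top_k(query, items, k=3):
    """Rank (title, vector) pairs by cosine similarity to query.

    Scores every item, which is fine for a few dozen movies but too slow
    for large collections; avoiding that cost is what a vector index is for.
    """
    scored = ((cosine_similarity(query, vec), title) for title, vec in items)
    return [(title, score) for score, title in heapq.nlargest(k, scored)]


# Toy 3-dimensional vectors standing in for 384-dimensional embeddings
items = [("A", [1.0, 0.0, 0.0]), ("B", [0.7, 0.7, 0.0]), ("C", [0.0, 0.0, 1.0])]
print(exact_top_k([1.0, 0.1, 0.0], items, k=2))
```

With the tutorial data, you could pass list(zip(nolan_movies['Title'], embeddings)) as items and compute_embeddings([plot])[0] as the query. The ranking should broadly agree with the index, though the scores may not match Memgraph's output exactly if the index computes or reports the metric differently.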

Create a Python function to query the movies_index and find the most similar movies based on a given plot description.

```python
def find_movie(plot):
    """Print the 3 movies whose plot embeddings are most similar to plot."""
    query_embedding = compute_embeddings([plot])[0].tolist()
    query = (
        "CALL vector_search.search('movies_index', 3, $embedding) "
        "YIELD node, similarity RETURN node.title, similarity"
    )
    with driver.session() as session:
        result = session.run(query, embedding=query_embedding)
        for record in result:
            print(record)
```

Step 6: Try It Out

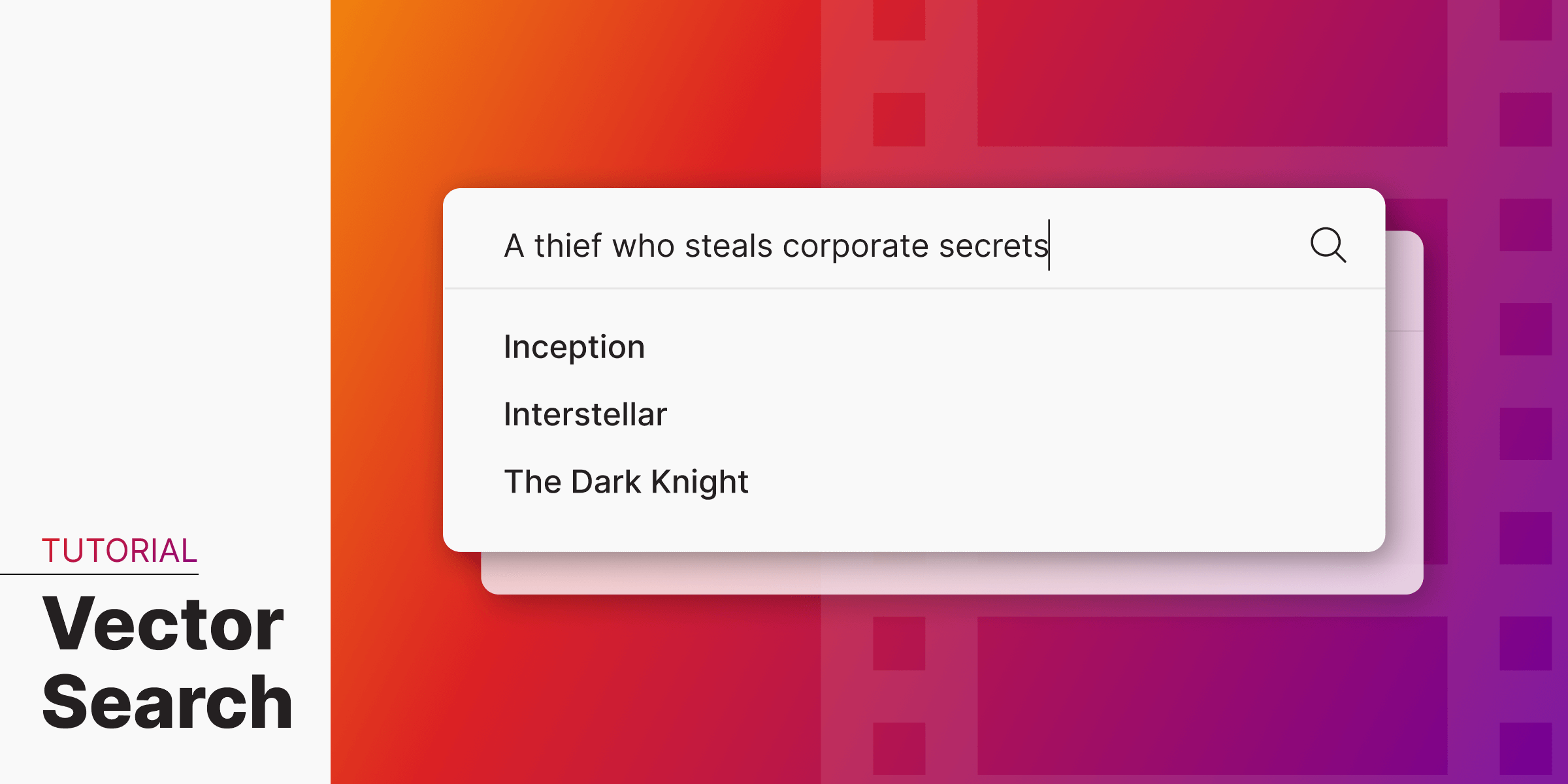

Example 1: Finding Inception

```python
plot = "A thief who steals corporate secrets through the use of dream-sharing technology is given the inverse task of planting an idea into the mind of a C.E.O."
find_movie(plot)
```

Expected Results:

```
<Record node.title='Inception' similarity=0.5250678062438965>
<Record node.title='Interstellar' similarity=0.2907602787017822>
<Record node.title='The Dark Knight' similarity=0.2784501910209656>
```

Example 2: Finding Memento

```python
plot = "An insurance investigator suffers from anterograde amnesia, leaving him unable to form new memories, and uses notes and tattoos to track down his wife's killer."
find_movie(plot)
```

Expected Results:

```
<Record node.title='Memento' similarity=0.37598633766174316>
<Record node.title='Insomnia' similarity=0.26347029209136963>
<Record node.title='Inception' similarity=0.23714923858642578>
```

Step 7: Expand the Dataset

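Add more movies to the dataset to experiment further and observe how the search results change. Since movies_index was configured with a capacity of 100, increase the capacity in --experimental-config if you plan to store more vectors than that. You can also try other similarity metrics through the metric field, such as Jaccard, Hamming, or Pearson, to fine-tune the results for your use case; note that Jaccard and Hamming are typically used with binary vectors rather than dense float embeddings like these.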
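As the dataset grows, sending one CREATE query per movie, as in Step 4, costs one round trip per row. A common alternative is to send rows in batches and expand them on the server with Cypher's UNWIND. A minimal sketch, assuming the same Movie properties as Step 4; insert_movies_in_batches and the default batch size of 500 are hypothetical choices, and the function accepts any object with a run(query, **params) method, such as a neo4j driver session.

```python
MOVIE_BATCH_QUERY = (
    "UNWIND $rows AS row "
    "CREATE (:Movie {title: row.title, year: row.year, embedding: row.embedding})"
)


def insert_movies_in_batches(session, movies, batch_size=500):
    """Create Movie nodes from dicts with title, year, and embedding keys.

    Hypothetical helper: session is anything exposing run(query, **params),
    e.g. a neo4j driver session connected to Memgraph.
    Returns the number of batches sent.
    """
    batches = 0
    for start in range(0, len(movies), batch_size):
        batch = movies[start:start + batch_size]
        session.run(MOVIE_BATCH_QUERY, rows=batch)
        batches += 1
    return batches


# With the tutorial data (not run here):
# rows = [
#     {"title": str(r["Title"]), "year": int(r["Release Year"]), "embedding": e.tolist()}
#     for (_, r), e in zip(nolan_movies.iterrows(), embeddings)
# ]
# with driver.session() as session:
#     insert_movies_in_batches(session, rows)


class RecordingSession:
    """Stand-in for a driver session that only records the calls it receives."""

    def __init__(self):
        self.calls = []

    def run(self, query, **params):
        self.calls.append((query, params))


fake = RecordingSession()
rows = [{"title": f"Movie {i}", "year": 2000 + i, "embedding": [0.0] * 384} for i in range(5)]
print(insert_movies_in_batches(fake, rows, batch_size=2))  # prints 3
```

Larger batches mean fewer round trips but bigger individual transactions, so the batch size is worth tuning for your data.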

Conclusion

By combining vector search with a graph database, Memgraph makes it easy to perform semantic searches with relational context. This example shows how vector search can power tasks like movie recommendation and content discovery.

If you’re looking to build GraphRAG with Memgraph, check out the other GenAI-related features in the Memgraph docs (GraphRAG with Memgraph).