Memgraph 3.0 Is Out: Solve the LLM Context Problem

Status and Journey

Memgraph 2.0 launched in 2021 and was a huge success: hundreds of thousands of downloads, thousands of community members, and dozens and dozens of customers who need speed and are excited to benefit from the most performant graph database on the market.

Since then, we’ve been on a relentless journey to refine Memgraph. Between 2.0 and 3.0, we focused on three key areas:

- Ease of use. Making Memgraph more intuitive with better tooling, documentation, and integrations.

- Enterprise-focused features. Bringing robust access control, advanced security, and high-availability options.

- Performance optimization. Pushing the limits of query speed, memory efficiency, and large-scale graph handling.

Now, Memgraph 3.0 is here to take things further. While graph analytics remains a core use case, we’ve also evolved to meet new demands in AI, real-time processing, and intelligent decision-making. Whether you're handling 1000+ reads and writes per second or integrating with AI-driven systems, Memgraph continues to deliver unmatched speed and flexibility.

AI Age: LLM Limitations and the Context Problem

The age of AI is here. AI and LLMs are taking the world by storm, and we are at the beginning of enormous economic enablement across industries, powered by chatbots and agents. To be useful, these apps need to be personalized and tailored to the user, with the right context. Otherwise, their answers stay generic.

Unfortunately, LLMs have clear limitations, most notably the context window. The Enterprise data and knowledge bases that users want to query are many orders of magnitude larger than the context window, and LLMs cannot process datasets that large without losing precision or hallucinating.

So, if AI is going to live up to its potential for the Enterprise, it needs better context. That's the problem that Memgraph 3.0 solves. Memgraph brings the most relevant information from your Enterprise datasets to the context window.

GraphRAG As a Key Building Block

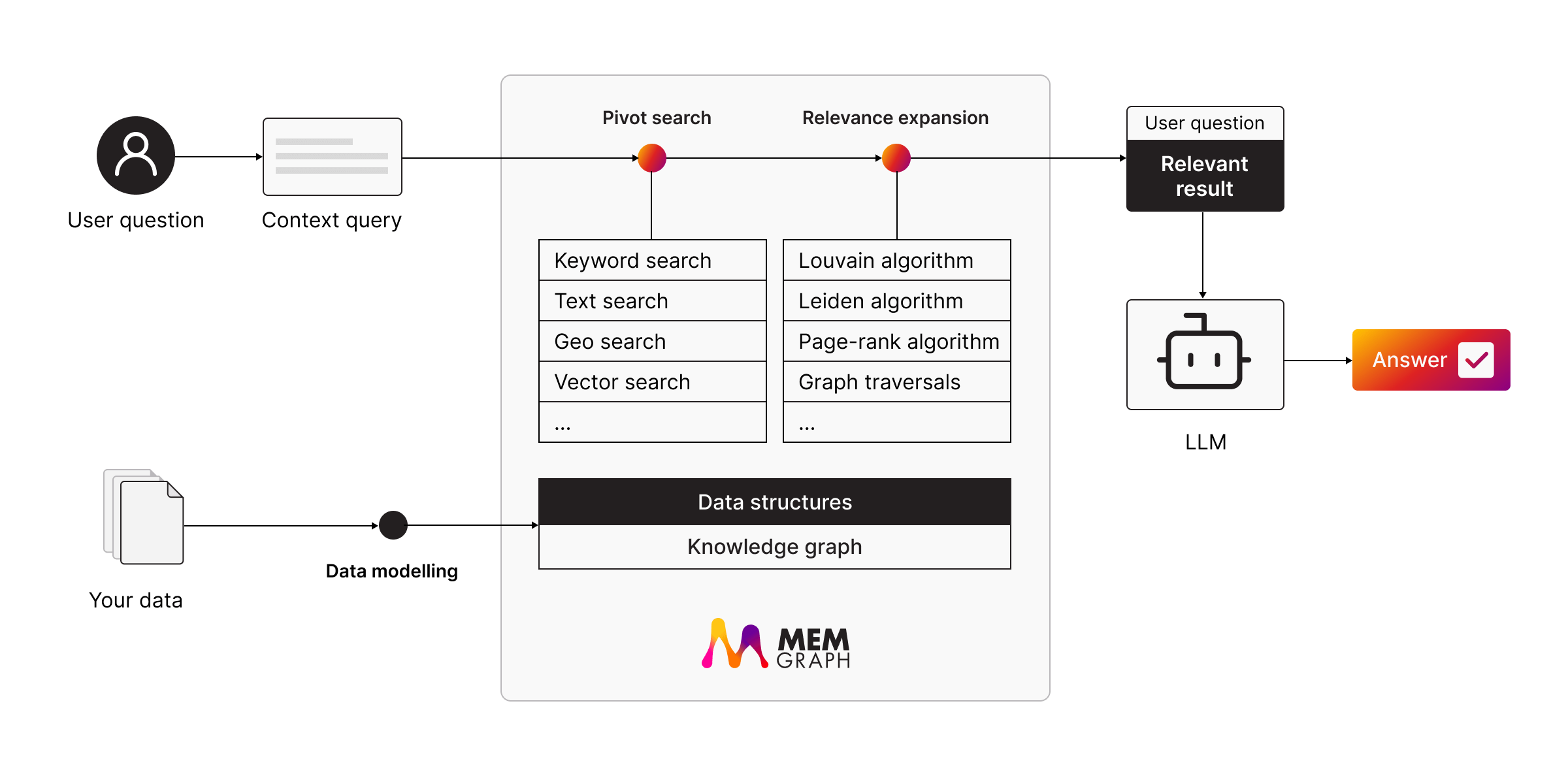

By acting as the context engine, Memgraph makes personalized GenAI apps possible. It does so through GraphRAG.

In a nutshell, GraphRAG uses the power of knowledge graphs to improve the recall and precision of retrieval-augmented generation (RAG) systems. Instead of just scraping the surface, GraphRAG dives deep into your knowledge graph to extract accurate, contextually relevant insights, minimize hallucinations, and deliver answers grounded in your proprietary data. Sometimes, though, you want to search by similarity across vast swathes of unstructured data.

That's where vectors come in. With vector search, you can combine graph-based reasoning with dense vector embeddings of unstructured data, such as documents and LLM-generated knowledge. Graphs and vectors are a perfect match: graphs provide explicit relationships, while vectors encode semantic similarity. Together, they create a powerful retrieval layer, enabling multi-hop reasoning, fast similarity search, and dynamic context refinement.
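To make the graph-plus-vector idea concrete, here is a minimal, in-memory Python sketch of hybrid retrieval: a vector similarity search picks seed nodes, then a breadth-first traversal follows explicit relationships to gather multi-hop context. This is not Memgraph's implementation or API. The node names, 3-dimensional embeddings, and function names are hypothetical, and a real system would use an indexed (typically approximate) vector search rather than the brute-force scan shown here.

```python
import math
from collections import deque

# Hypothetical toy data: node id -> embedding (3-d vectors for illustration only).
EMBEDDINGS = {
    "policy_leave": [0.9, 0.1, 0.0],
    "policy_travel": [0.1, 0.9, 0.0],
    "form_leave_request": [0.8, 0.2, 0.1],
    "dept_hr": [0.2, 0.2, 0.9],
}

# Hypothetical explicit relationships, stored as undirected adjacency lists.
EDGES = {
    "policy_leave": ["form_leave_request", "dept_hr"],
    "policy_travel": ["dept_hr"],
    "form_leave_request": ["policy_leave"],
    "dept_hr": ["policy_leave", "policy_travel"],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def vector_seeds(query_vec, k):
    """Step 1: exact (brute-force) top-k similarity search over node embeddings."""
    ranked = sorted(EMBEDDINGS, key=lambda n: cosine(query_vec, EMBEDDINGS[n]), reverse=True)
    return ranked[:k]


def expand(seeds, max_hops):
    """Step 2: breadth-first traversal from the seeds; returns {node: hop distance}."""
    hops = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not follow edges beyond the hop limit
        for neighbor in EDGES.get(node, []):
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    return hops


def hybrid_retrieve(query_vec, k=1, max_hops=1):
    """Vector search picks where to start; graph traversal adds connected context."""
    return expand(vector_seeds(query_vec, k), max_hops)


print(hybrid_retrieve([1.0, 0.0, 0.0]))
```

With `k=1` and `max_hops=1`, the query vector `[1.0, 0.0, 0.0]` lands on `policy_leave`, and the traversal then adds `form_leave_request` and `dept_hr`. `dept_hr` has low embedding similarity to the query, so a similarity-only top-1 search would never have returned it. The graph supplies it because it is explicitly connected.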

With Memgraph’s in-memory graph database as the context engine at its core, GraphRAG integrates knowledge graphs, advanced dynamic algorithms, and user intent from LLMs to enable developers to create chatbots and agents with the most relevant information fed into the context window.
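Feeding "the most relevant information" into the context window ultimately comes down to a budgeting step. The sketch below shows one simple approach, which is an assumption and not Memgraph's method: rank retrieved facts by a relevance score and greedily pack them into a fixed token budget before building the prompt. The scores, fact strings, word-count token estimate, and function names are all hypothetical.

```python
# Hypothetical retrieved facts as (relevance score, text) pairs.
FACTS = [
    (0.20, "dept_hr OWNS policy_travel"),
    (0.95, "policy_leave REQUIRES form_leave_request"),
    (0.60, "dept_hr OWNS policy_leave"),
]


def estimate_tokens(text):
    """Rough token estimate: whitespace-separated words (assumption; use the model's tokenizer in practice)."""
    return len(text.split())


def pack_context(facts, budget_tokens):
    """Add facts in descending score order, skipping any that would exceed the budget."""
    chosen, used = [], 0
    for _score, text in sorted(facts, key=lambda f: f[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost <= budget_tokens:
            chosen.append(text)
            used += cost
    return "\n".join(chosen)


def build_prompt(question, facts, budget_tokens):
    """Place the packed context ahead of the user's question."""
    context = pack_context(facts, budget_tokens)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How do I request leave?", FACTS, budget_tokens=6))
```

With a 6-token budget, the two highest-scoring facts fit and the low-scoring `policy_travel` fact is left out. Greedy packing is the simplest option. Smarter strategies might deduplicate facts or summarize them, but the core constraint stays the same: the budget is fixed, so retrieval quality decides what the model sees.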

So, What’s New in 3.0?

Vector search is now a core feature: Memgraph 3.0 combines similarity-based vector search with relevance-based graph search in a single, unified system. This makes it ideal for pinpointing the most relevant nodes in your knowledge graph.

GraphChat gets smarter in Memgraph Lab 3.0: We’ve made major updates to GraphChat in Memgraph Lab (which now also has dark mode). You don’t need to be a Cypher expert to unlock the full potential of your data. Just ask a question in plain English, and GraphChat will convert it into a Cypher query, run it, and give you the best possible answer, grounded in the context of your knowledge graph. No more guessing, no more approximations, just answers that make sense. With the rise of DeepSeek, Memgraph Lab now also supports adding DeepSeek models and connections to GraphChat.

Performance & reliability improvements: The latest update also significantly improves performance and reliability. Replication recovery has been optimized for more efficient failover handling, ensuring greater system resilience. Query execution is now faster, with improved abort times and better performance under load. Additionally, Memgraph 3.0 includes security updates, such as updated Python libraries in the Docker package, to enhance overall system safety.

For more details, head over to the release notes and the Memgraph docs, which cover the features supporting GraphRAG with Memgraph.

NASA and Cedars-Sinai Are Already Using Memgraph!

There's a long list of companies and developers that are using Memgraph for their graph analytics use cases, but there's also a growing list of companies that are using Memgraph for their GenAI use cases.

The most notable are NASA and Cedars-Sinai. NASA uses Memgraph in its HR Q&A system for employees.

David Meza, Head of Analytics Human Capital at NASA:

At NASA, we are integrating Memgraph in our Human Capital Intelligent Query System to efficiently manage our human capital knowledge graph, enabling faster retrieval of relevant information for employees. Its graph-based approach allows us to keep track of real-time updates, ensuring accurate connections between various policy documents and data sources. By incorporating Memgraph into our RAG process, we enhance our system’s responsiveness and better address NASA’s knowledge extraction without requiring extensive manual data coordination.

Cedars-Sinai, meanwhile, built a GraphRAG-powered system to query its Alzheimer’s Disease Knowledge Base (AlzKB). Also in healthcare, Precina Health uses Memgraph for real-time patient data retrieval, enabling AI-powered personalized treatment plans for diabetes management.

Finally, Microchip, one of our early adopters, used Memgraph early in the year for its LLM chatbot, which delivers fast, context-aware support.

Resources

- Download Memgraph: Get Memgraph 3.0 and start building your apps!

- Docs and release notes: Everything you need to know. What’s new, what changed, and how to use it.

- AI demos: Real-world GraphRAG, AI, and graph-powered workflows. See it in action.

- Events: If you care about GraphRAG, Memgraph Lab 3.0, and AI-powered search, join us for:

  - February 13th – Memgraph Agentic GraphRAG

  - February 18th – GraphRAG Global Search With Hierarchical Modeling

  - February 20th – Memgraph Lab 3.0 Demo

  - February 27th – Memgraph + DeepSeek for Retrieval-Augmented Generation (RAG)