Building GenAI Applications with Memgraph: Easy Integration with GPT and Llama

Many developers are incorporating large language models (LLMs) into their applications. Graph technology is both popular and useful for these use cases, so a GenAI stack built around a graph database gives you a practical foundation.

Building Retrieval-Augmented Generation (RAG) Systems

To make it easier for developers to use various LLMs with Memgraph, we’ve integrated Memgraph with LangChain JS and LangChain Python—frameworks for developing applications powered by LLMs. LangChain simplifies building a RAG system, which retrieves data from Memgraph and generates natural language responses. Check out Bret Brewer’s presentation to see it in action.

With the RAG system, you can build a GenAI application, such as a chat model that connects to Memgraph for knowledge and context retrieval. This model follows the RAG system framework, consisting of retrieval and generation components. It retrieves information from both the LLM knowledge and Memgraph. The chat model uses this information to generate responses. See how Memgraph’s customer Microchip implemented a similar use case.
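The retrieval and generation steps described above can be sketched in a few lines of plain Python. This is a minimal illustration of one common text-to-Cypher pattern, not the LangChain implementation itself: `answer_question`, `llm`, `run_cypher`, and the prompt wording are all hypothetical names and choices made for this example. The two callables stand in for a real LLM client and a real Memgraph connection.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


def answer_question(
    question: str,
    schema: str,
    llm: Callable[[str], str],               # hypothetical: prompt in, text out
    run_cypher: Callable[[str], List[Row]],  # hypothetical: query in, rows out
) -> str:
    """Answer a question in two steps: retrieve from the graph, then generate."""
    # Retrieval: ask the LLM to translate the question into Cypher, then run it.
    cypher = llm(
        "Translate the question into a single Cypher query.\n"
        f"Graph schema:\n{schema}\n"
        f"Question: {question}\n"
        "Cypher:"
    ).strip()
    rows = run_cypher(cypher)

    # Generation: answer the question using the retrieved rows as context.
    return llm(
        "Answer the question using only the context.\n"
        f"Context: {rows}\n"
        f"Question: {question}\n"
        "Answer:"
    ).strip()
```

Passing the LLM and the database in as functions keeps the sketch independent of any particular client library, so either side can be swapped or replaced with a stub in tests.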

Using Memgraph for graph storage enhances contextual awareness beyond traditional methods. Graph stores, like Memgraph, are naturally suited for knowledge graphs and handle complex relationships more efficiently. This efficiency benefits Retrieval-Augmented Generation (RAG) models, which require an understanding of these relationships to produce accurate, contextually relevant responses.

Because relationships are integral to the graph data model, graph stores capture the semantics of the data more directly, leading to improved retrieval accuracy and generation results. Additionally, Memgraph's advanced analytics library, MAGE, provides algorithms such as shortest path, centrality, and community detection. These algorithms offer further context, enhancing the performance of RAG models.

GraphChat - GenAI-powered Feature in Memgraph Lab

GraphChat, a feature in Memgraph Lab, is a prime example of a RAG system within Memgraph. It allows you to chat with Memgraph about knowledge it contains, bridging the Cypher knowledge gap and making it easy for anyone to query a graph database. GraphChat supports connections to OpenAI, Azure OpenAI, and Ollama LLMs.

For more information about GraphChat, check out Natural Language Querying with Memgraph Lab.

Memgraph GenAI Stack to Get You Started

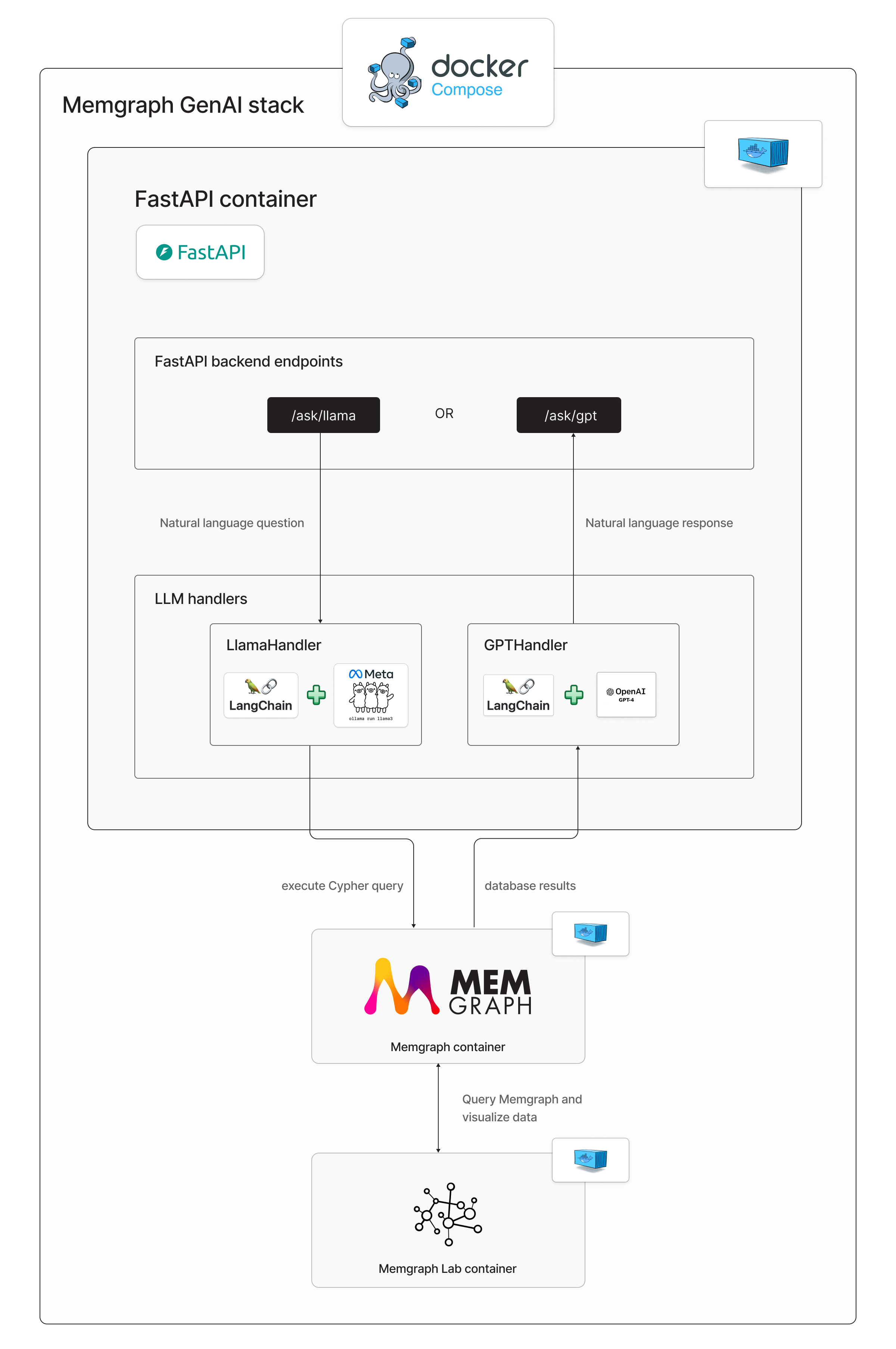

Building GenAI applications can be complex, but Memgraph offers a template to help you get started. This demo application allows you to chat with Memgraph in natural language. The backend is built with FastAPI, and the RAG framework is implemented with LangChain. The application is containerized with Docker Compose and supports GPT-4 and Llama 3 LLMs. It includes Memgraph, Memgraph Lab, and FastAPI backend services.

The demo comes with a preloaded Game of Thrones dataset for schema generation. You can load your own dataset by updating the dataset.cypherl file.
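If your data lives somewhere other than Cypher already, a small script can produce the contents of such a file. The sketch below assumes the file holds one Cypher statement per line, each ending in a semicolon. The helper names and the `Character`, `House`, and `BELONGS_TO` names used with them are hypothetical and are not taken from the demo's actual dataset.

```python
import json
from typing import Dict, Iterable, Tuple, Union

Scalar = Union[str, int, float, bool]
NodeRef = Tuple[str, str, Scalar]  # (label, property key, property value)


def cypher_literal(value: Scalar) -> str:
    """Render a Python scalar as a Cypher literal."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return repr(value)
    # json.dumps gives a double-quoted string with quotes and backslashes escaped.
    return json.dumps(value, ensure_ascii=False)


def node_statement(label: str, props: Dict[str, Scalar]) -> str:
    """Build a CREATE statement for one node."""
    body = ", ".join(f"{key}: {cypher_literal(val)}" for key, val in props.items())
    return f"CREATE (:{label} {{{body}}});"


def relationship_statement(start: NodeRef, rel_type: str, end: NodeRef) -> str:
    """Build a statement that links two existing nodes found by one property."""
    (s_label, s_key, s_val), (e_label, e_key, e_val) = start, end
    return (
        f"MATCH (a:{s_label} {{{s_key}: {cypher_literal(s_val)}}}), "
        f"(b:{e_label} {{{e_key}: {cypher_literal(e_val)}}}) "
        f"CREATE (a)-[:{rel_type}]->(b);"
    )


def to_cypherl(statements: Iterable[str]) -> str:
    """Join statements into file contents, one statement per line."""
    return "\n".join(statements) + "\n"
```

For example, `to_cypherl([node_statement("Character", {"name": "Jon Snow"})])` yields a single `CREATE` line that could be written to the file. Node statements should come before the relationship statements that match on them.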

The available API endpoints are:

- http://localhost:8000/ask/gpt for the GPT-4 model

- http://localhost:8000/ask/llama for the Llama 3 model

To chat with Memgraph using GPT-4, run:

curl -X POST "http://localhost:8000/ask/gpt" -H "Content-Type: application/json" -d '{"question": "How many seasons there are?"}'

To chat with Llama 3, run:

curl -X POST "http://localhost:8000/ask/llama" -H "Content-Type: application/json" -d '{"question": "How many seasons there are?"}'

Both commands should return:

{"question":"How many seasons there are?","response":"There are 8 seasons."}
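The same requests can be made from Python using only the standard library. The sketch below is an illustration rather than part of the demo: `build_ask_request` and `ask` are hypothetical helper names, and the code assumes the endpoints, the `question` field, and the `response` field behave as shown in the curl examples.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # host and port from the endpoint list


def build_ask_request(model: str, question: str) -> urllib.request.Request:
    """Build the POST request for /ask/gpt or /ask/llama without sending it."""
    if model not in ("gpt", "llama"):
        raise ValueError(f"unknown model: {model!r}")
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/ask/{model}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, question: str, timeout: float = 60.0) -> str:
    """Send the question and return the "response" field of the JSON reply."""
    request = build_ask_request(model, question)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        payload = json.loads(reply.read().decode("utf-8"))
    return payload["response"]
```

With the demo stack running locally, calling `ask("gpt", "How many seasons there are?")` sends the same request as the first curl command. Building the request separately from sending it makes the payload easy to inspect without a running server.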

This application shows how straightforward it can be to implement the RAG framework and build your own GenAI application. Use this template as a starting point and tailor it to your needs.

The demo app is written in Python, since Memgraph really shines in the Python ecosystem. Memgraph Advanced Graph Extensions (MAGE) is a set of graph algorithms and utility procedures, some implemented in C++ and others in Python. MAGE is extensible through custom query modules, so you can stay in your familiar Python environment and build new procedures that extend the capabilities of the Cypher query language. Being at home in the Python ecosystem is also useful for code generation, function calling, or running small local LLMs inside a custom query module.

Share Your GenAI App

If you’ve built a GenAI application with Memgraph, we encourage you to share your story with the Memgraph community. We're always open to external contributions and eager to hear about new developments in the LLM world and how graphs power exciting use cases.

Useful Links

- Webinar recording: Memgraph Community Call - Querying Memgraph through an LLM

- Blog post: How Microchip Uses Memgraph’s Knowledge Graphs to Optimize LLM Chatbots

- Webinar recording: Microchip Optimizes LLM Chatbot with RAG and a Knowledge Graph

- Get-started template: Memgraph GenAI Stack

- Memgraph Discord community