Inside the Memgraph MCP Client: Interoperable Graph Context in Action

What does it take to make LLMs work with real enterprise systems in a clean, predictable way? Most teams still rely on custom integrations that break as soon as they scale. Model Context Protocol (MCP) offers a different path. It gives LLMs a standard way to discover capabilities, call tools, and work across many systems without custom wiring.

In Memgraph's recent community call, Toni Lastre walked through why that matters, how the all-new Memgraph MCP Client fits into the picture, and how multi-server workflows actually run in Memgraph Lab. For the complete walkthrough, including the live demo, catch the full recording here.

Below are some of the highlights from the talk to give you a sense of what was covered.

Key Takeaway 1: Why MCP Was Created

Anyone who has tried to connect LLMs to external enterprise systems knows the pain. Every service has its own API endpoint. Every integration needs custom logic. Add more systems and everything breaks.

That pattern tends to end the same way: enough fragmentation eventually produces a standard.

MCP is that standard for AI tools. Think of it as a USB-C port for AI applications.

It defines how AI apps, clients, and servers should talk to each other. And it finally gives LLMs a consistent way to discover capabilities, call tools, and exchange context without one-off adapters.
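To make that concrete, here is a minimal sketch of the messages a client sends, assuming the JSON-RPC 2.0 message format that MCP uses on the wire. It shows the handshake (`initialize`, followed by the `notifications/initialized` notification), tool discovery (`tools/list`), and tool invocation (`tools/call`). The protocol version string, client name, tool name `run_query`, and the Cypher query are illustrative assumptions, not what Memgraph Lab actually sends.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # request ids must be unique within a session


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request (the peer answers with the same id)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 notification (no id, no response expected)."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Handshake: negotiate the protocol version and declare client capabilities.
init = request("initialize", {
    "protocolVersion": "2025-06-18",  # assumed spec revision
    "capabilities": {"roots": {"listChanged": True}, "sampling": {}, "elicitation": {}},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
})
initialized = notification("notifications/initialized")

# 2. Discovery: ask the server which tools it exposes.
list_tools = request("tools/list")

# 3. Invocation: call one tool by name with JSON arguments.
call_tool = request("tools/call", {
    "name": "run_query",  # hypothetical tool name
    "arguments": {"query": "MATCH (p:Park) RETURN p.name LIMIT 5"},  # hypothetical query
})

for msg in (init, initialized, list_tools, call_tool):
    print(json.dumps(msg))
```

The same three steps apply to every server, which is what lets one client talk to many servers without per-service adapters.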

Key Takeaway 2: What MCP Actually Covers

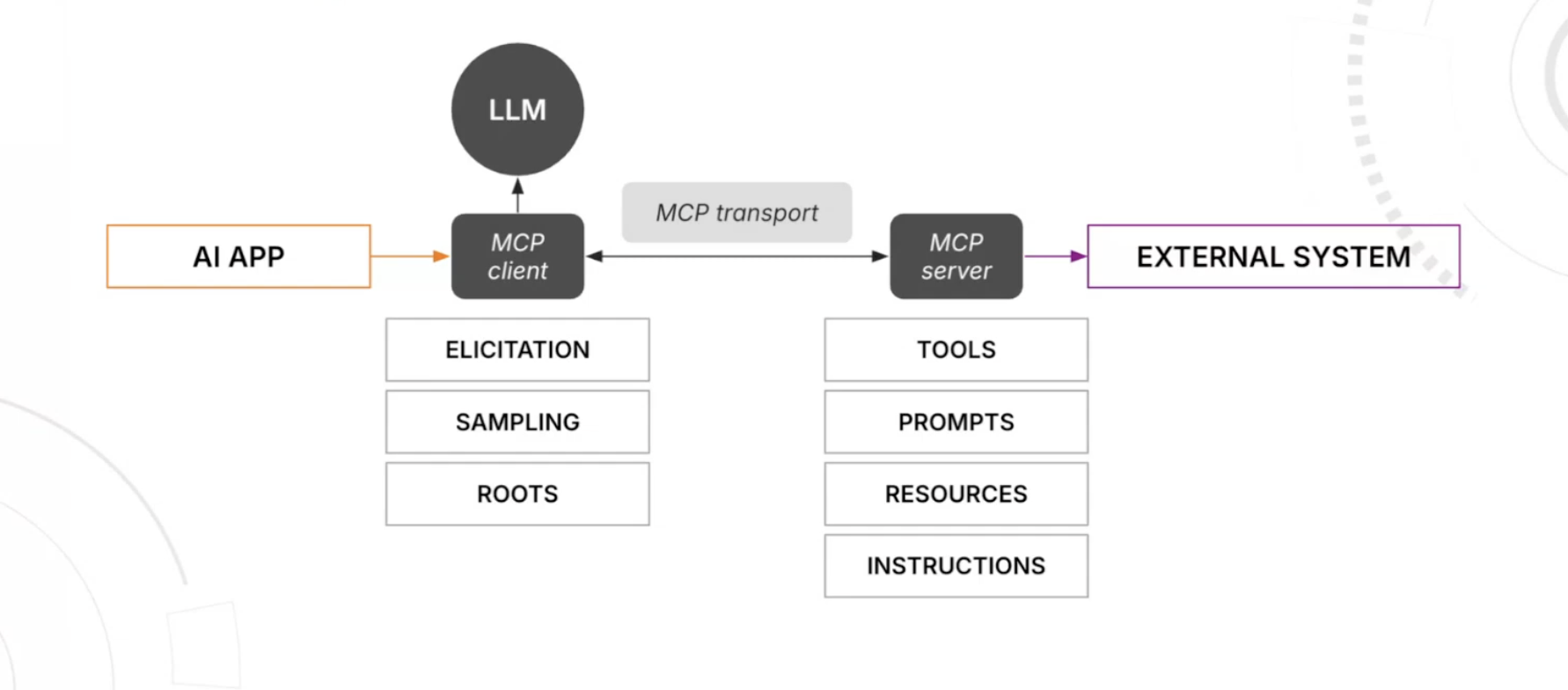

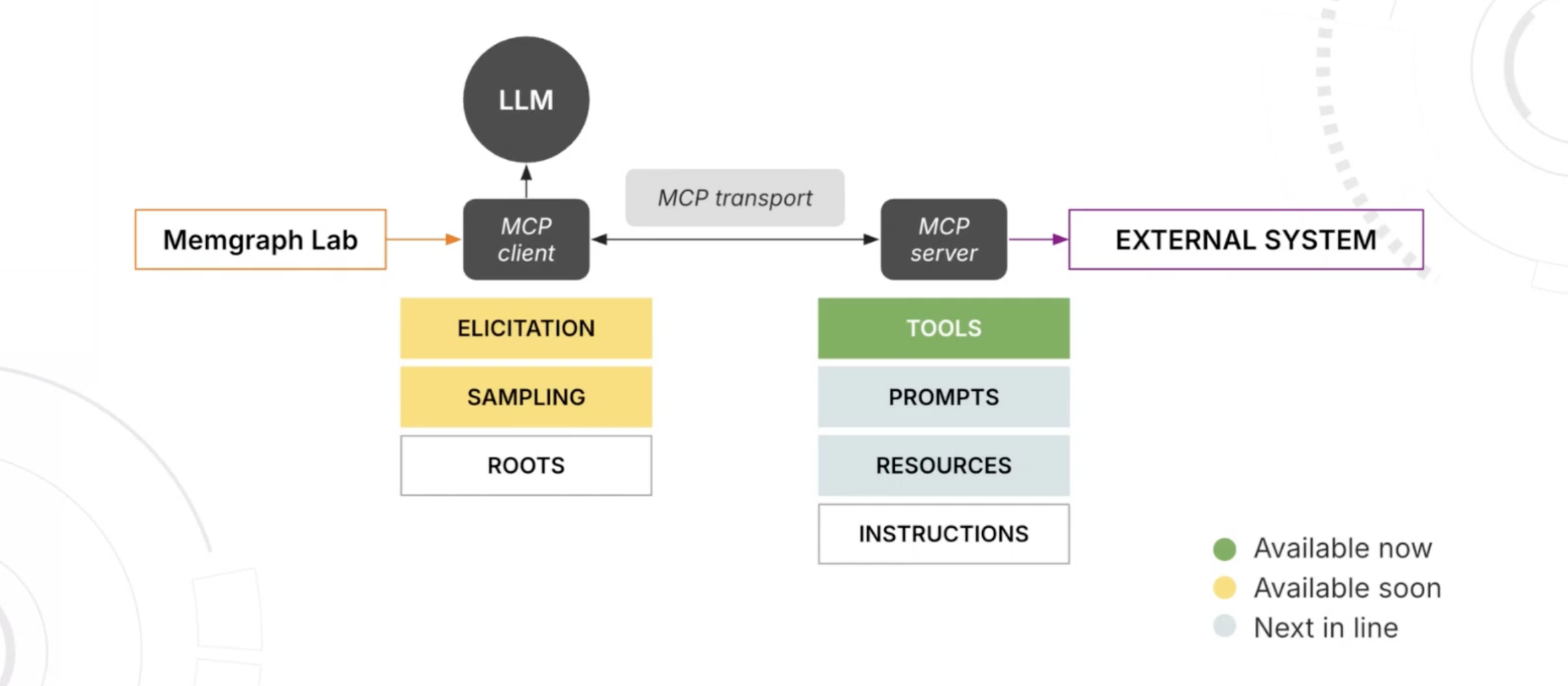

Model Context Protocol (MCP) is an open-source standard by Anthropic. It has two core components:

- MCP Client: initiates connections to servers and relays requests between the LLM and the servers.

- MCP Server: a program that exposes specific capabilities (tools, prompts, resources, etc.) to AI applications. There are registries like “Awesome MCP Servers” that catalogue these servers.

It is not only used for "tool calling". It also defines:

- tools: executable actions (run Cypher, run PageRank, fetch data).

- prompts: predefined LLM instruction templates.

- resources: read-only data sources (files, docs, schemas).

- instructions: guidance that helps the client understand how to use the server's tools.

- elicitation: lets a server ask the user for more details mid-task.

- sampling: lets a server request an LLM completion through the client, enabling agentic workflows.

- roots: define which directories the server should focus on.

Each feature improves how the AI app reasons, retrieves context, or delegates work.

The point is not to overload the LLM with everything. The point is to give it a clean, structured catalog of what is possible.
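Not every item in that catalog flows in the same direction. Tools, prompts, resources, and instructions are offered by the server, while elicitation, sampling, and roots are requests the server sends back to the client. The sketch below shows how a client might dispatch those server-initiated requests. The method names follow the MCP specification; the handler bodies are stubs (no real LLM call or user interface), and the root URI and warehouse example are hypothetical.

```python
# Client-side handlers for requests that an MCP server sends to the client.
# Handler bodies are stubs: no real LLM call or user prompt happens here.


def handle_sampling(params):
    # A real client would (with user approval) forward params["messages"]
    # to its configured LLM. Here we return a canned assistant reply.
    last_text = params["messages"][-1]["content"]["text"]
    return {
        "role": "assistant",
        "content": {"type": "text", "text": f"stub reply to: {last_text}"},
        "model": "stub-model",
        "stopReason": "endTurn",
    }


def handle_elicitation(params):
    # A real client would render params["requestedSchema"] as a form for the user.
    # Here we pretend the user accepted and typed "example" into every field.
    fields = params["requestedSchema"]["properties"]
    return {"action": "accept", "content": {name: "example" for name in fields}}


def handle_roots(params):
    # Roots tell the server which locations it should work within (hypothetical path).
    return {"roots": [{"uri": "file:///home/user/project", "name": "project"}]}


HANDLERS = {
    "sampling/createMessage": handle_sampling,
    "elicitation/create": handle_elicitation,
    "roots/list": handle_roots,
}


def dispatch(message):
    """Answer one server-to-client JSON-RPC request."""
    handler = HANDLERS.get(message["method"])
    if handler is None:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": message["id"],
            "result": handler(message.get("params", {}))}


# Example: a server asks the user which warehouse to ship from.
reply = dispatch({
    "jsonrpc": "2.0", "id": 42, "method": "elicitation/create",
    "params": {
        "message": "Which warehouse should the order ship from?",
        "requestedSchema": {"type": "object",
                            "properties": {"warehouse": {"type": "string"}}},
    },
})
print(reply)
```

A client that only implements tool calling would answer all three of these with an error, which is one reason "MCP feature support" matters when comparing clients.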

Key Takeaway 3: What Makes an MCP Client Valuable

There are dozens of MCP clients out there. So how do you know which ones are actually useful for your business use cases? Consider four evaluation dimensions:

- App features: the UI/UX should add value beyond pure tool execution.

- LLM integration: how many models are supported, and whether their advanced features are supported too.

- MCP feature support: which features the client handles fully (elicitation, sampling, etc.).

- Transport support: which transport types are supported; local vs. remote, stdio vs. Streamable HTTP.
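With the stdio transport, the client launches the server as a local subprocess and the two exchange newline-delimited JSON-RPC messages over stdin/stdout; Streamable HTTP carries the same messages over HTTP POST requests to a remote endpoint instead. The sketch below shows only the stdio framing, using an in-memory stream in place of a real subprocess pipe. The `echo` tool name is hypothetical.

```python
import io
import json


def write_message(stream, message):
    """Write one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings as "\n" sequences,
    # so the encoded message never contains a raw newline.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield JSON-RPC messages from a newline-delimited stream."""
    for line in stream:
        line = line.strip()
        if line:  # skip blank lines
            yield json.loads(line)


# In-memory stand-in for the pipe between client and server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": "echo",  # hypothetical tool
                                "arguments": {"text": "line one\nline two"}}})
pipe.seek(0)
for msg in read_messages(pipe):
    print(msg["id"], msg["method"])
```

Because the message payload is identical across transports, a client can keep one code path for requests and swap only the framing layer.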

Key Takeaway 4: Memgraph Lab Now Works as an MCP Client

Now that Memgraph Lab has added MCP support, things get interesting.

You can:

- connect to multiple MCP servers at once

- discover all the tools they expose

- route tasks between them

- combine graph queries, search tools, visualisation tools, and custom APIs

Lab becomes the place where your agent runs. It handles the sessions, tool invocation, and the messy coordination logic. You only decide which tools the agent should see.
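The discovery and curation side of that job can be sketched as merging the tool catalogs of several servers and exposing only an allowed subset to an agent. This is an illustrative assumption of how a client could do it, not Lab's implementation: the `server__tool` naming scheme, the server keys, and all tool names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    server: str       # which MCP server owns the tool
    name: str         # the tool's name as that server reports it
    description: str


def aggregate(catalogs):
    """Merge per-server tools/list results into one catalog.

    Names are namespaced as 'server__tool' so identically named tools
    on different servers do not collide (an assumed convention).
    """
    merged = {}
    for server, tools in catalogs.items():
        for t in tools:
            merged[f"{server}__{t['name']}"] = Tool(server, t["name"], t.get("description", ""))
    return merged


def curate(merged, allowed):
    """Keep only the tools the agent is allowed to see."""
    return {q: t for q, t in merged.items() if q in allowed}


def route(visible, qualified_name, arguments):
    """Map an LLM tool call to (server, tools/call params); hidden tools raise KeyError."""
    tool = visible[qualified_name]
    return tool.server, {"name": tool.name, "arguments": arguments}


# Hypothetical catalogs, as if returned by tools/list on three servers.
catalogs = {
    "memgraph": [{"name": "run_query", "description": "Run a Cypher query"},
                 {"name": "get_schema", "description": "Describe the graph schema"}],
    "charts": [{"name": "bar_chart"}, {"name": "pie_chart"}],
    "tavily": [{"name": "search"}, {"name": "crawl"}],
}

agent_tools = curate(aggregate(catalogs),
                     {"memgraph__run_query", "charts__bar_chart", "tavily__search"})
server, params = route(agent_tools, "memgraph__run_query",
                       {"query": "MATCH (n) RETURN count(n)"})
print(sorted(agent_tools), server, params)
```

Curating the list also keeps the model's tool menu short, which helps with the "too many tools" problem discussed later in the talk.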

For anyone working on agentic workflows, this is one of the most valuable updates in Lab.

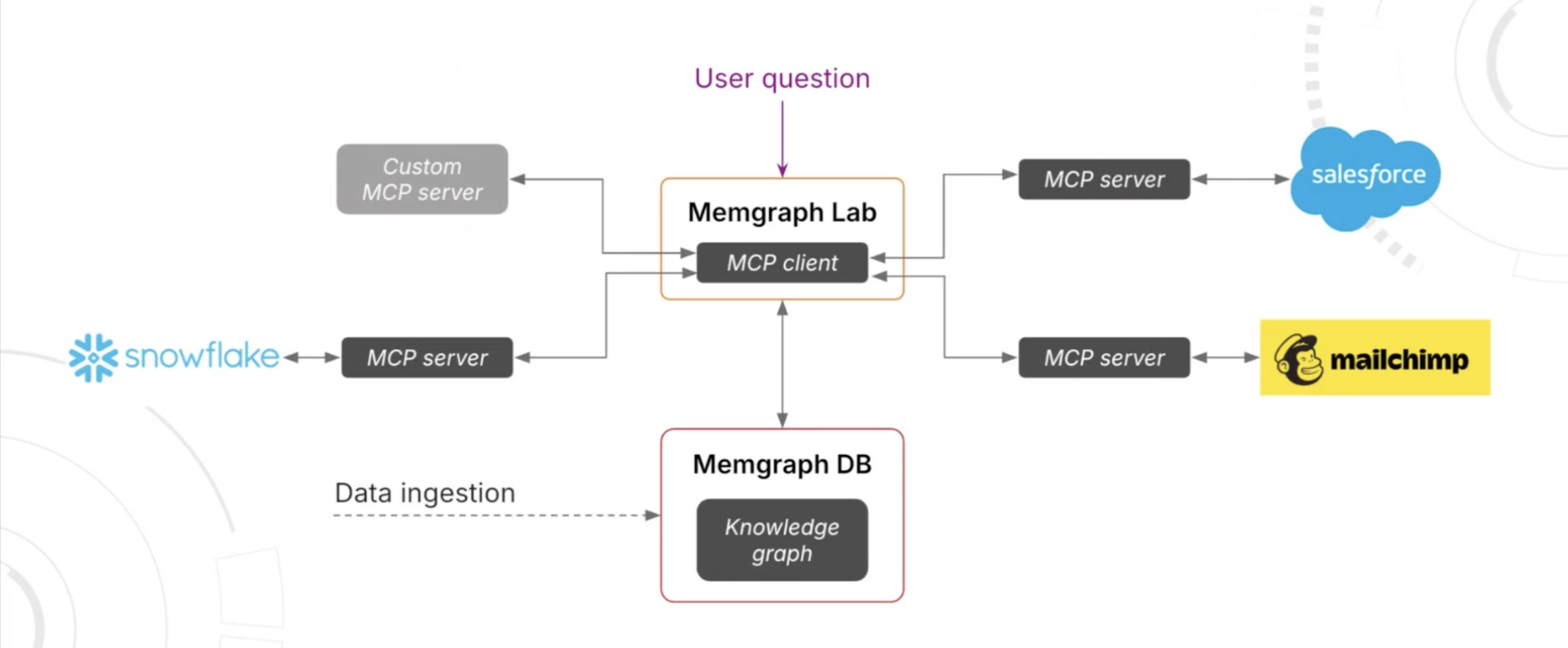

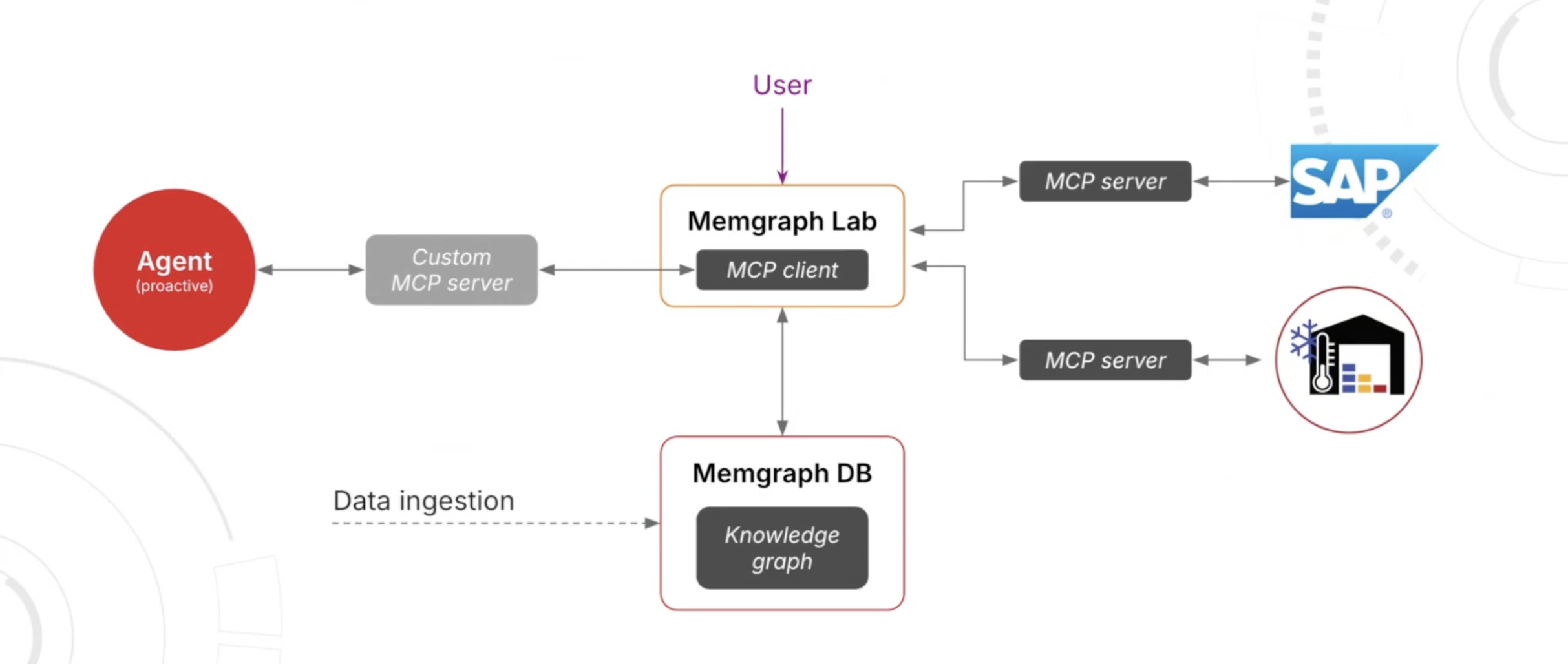

Key Takeaway 5: How Multi-Server Workflows Actually Work

A few examples were showcased to describe how you can actually use Lab’s MCP Client to automate multi-server workflows:

Customer 360: An agent can pull account status from Snowflake, fetch sales activity from Salesforce, look up communities in Memgraph, and then prepare a Mailchimp campaign. Everything happens in one place without writing glue code.

Supply chain: An agent can use elicitation to request missing details, use sampling to generate options, and coordinate tasks across SAP, sensors, and a custom agent.

Both examples show the same pattern. Once all systems expose structured capabilities through MCP, an LLM can coordinate across them without hacks.
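That pattern can be sketched as a simple agent loop: the model picks a tool, the client routes the call to the server that owns it, and the result is appended to the conversation until the model produces an answer. Everything here is a stand-in: the model is scripted, each server is a dict of local functions rather than a real MCP connection, and the tool names, the account "ACME", and all returned data are invented for illustration.

```python
# Hypothetical stand-ins for four MCP servers: server -> {tool name -> function}.
SERVERS = {
    "snowflake": {"account_status": lambda a: {"account": a["account"], "status": "active"}},
    "salesforce": {"recent_activity": lambda a: {"account": a["account"], "open_deals": 2}},
    "memgraph": {"community_of": lambda a: {"account": a["account"], "community": 4}},
    "mailchimp": {"draft_campaign": lambda a: {"segment": a["community"], "status": "draft"}},
}


def scripted_llm(history):
    """Stand-in for the model: picks the next tool call from prior results, or answers."""
    results = [m for m in history if m["role"] == "tool"]
    step = len(results)
    if step == 0:
        return {"call": ("snowflake", "account_status", {"account": "ACME"})}
    if step == 1:
        return {"call": ("salesforce", "recent_activity", {"account": "ACME"})}
    if step == 2:
        return {"call": ("memgraph", "community_of", {"account": "ACME"})}
    if step == 3:
        # Use the graph result to target the campaign.
        community = results[-1]["content"]["community"]
        return {"call": ("mailchimp", "draft_campaign", {"community": community})}
    return {"answer": f"Drafted campaign for community {results[-1]['content']['segment']}"}


def run_agent(llm, servers, prompt, max_steps=10):
    """Loop: ask the model, route its tool call to the owning server, record the result."""
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        decision = llm(history)
        if "answer" in decision:
            return decision["answer"], history
        server, tool, args = decision["call"]
        # In a real client this would be a tools/call request on that server's session.
        result = servers[server][tool](args)
        history.append({"role": "tool", "server": server, "tool": tool, "content": result})
    raise RuntimeError("agent did not finish within max_steps")


answer, history = run_agent(scripted_llm, SERVERS, "Prepare a campaign for ACME's community")
print(answer)
```

The loop itself never changes when a server is added; only the catalog the model can choose from grows, which is the point the examples make.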

Key Takeaway 6: Live Demo Walkthrough

The session wrapped with a hands-on demo showing how Memgraph Lab becomes an interoperable workspace once MCP servers are connected.

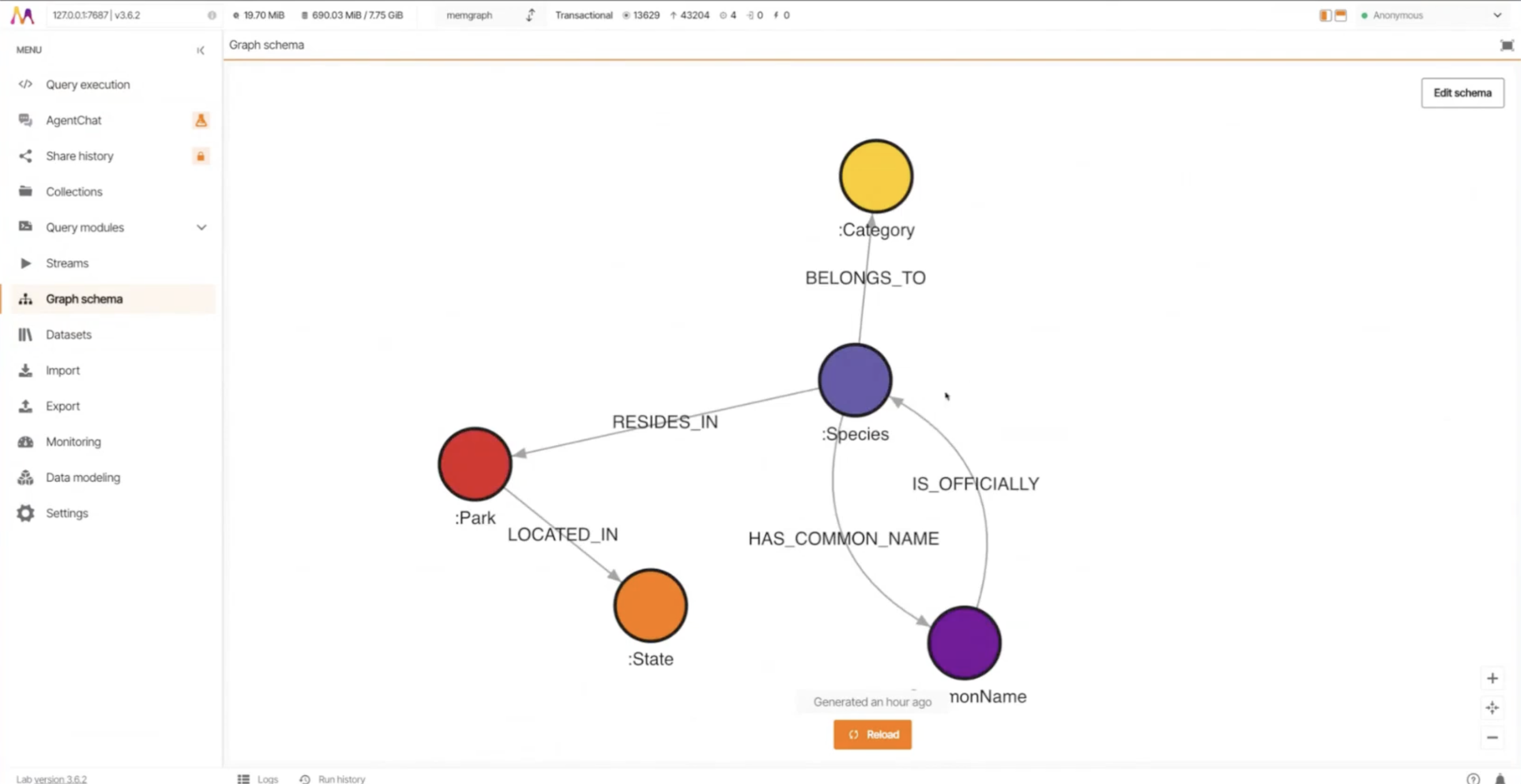

It starts with a simple setup. Memgraph is running locally with a U.S. National Parks dataset loaded. Here’s what its schema looks like:

Inside Lab, the following setup is in place:

- OpenAI provider configured

- three MCP servers connected: the Memgraph server for graph querying and analysis, a Charting server for visualizations, and the Tavily server for web search and crawling

- an agent created with a curated set of tools

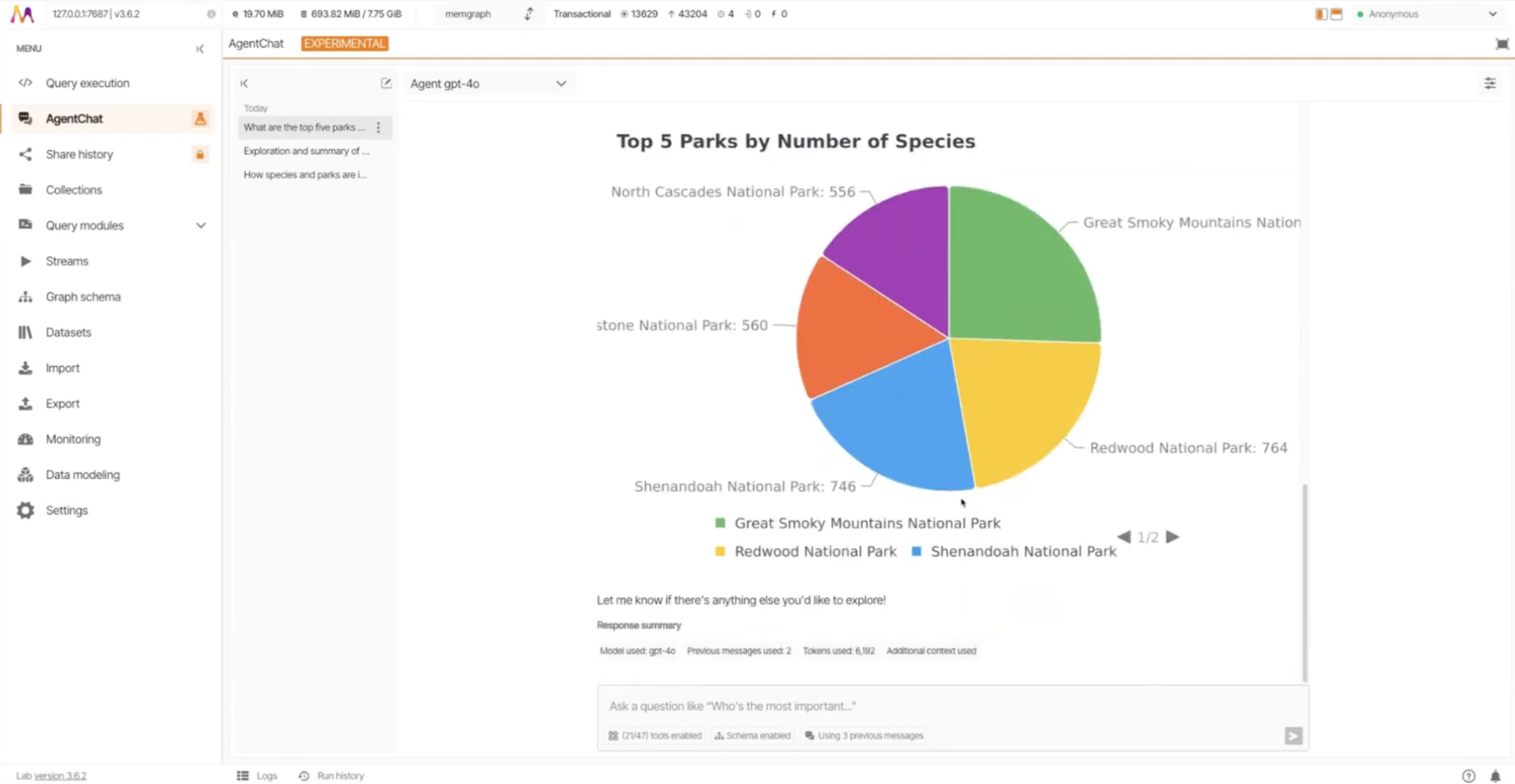

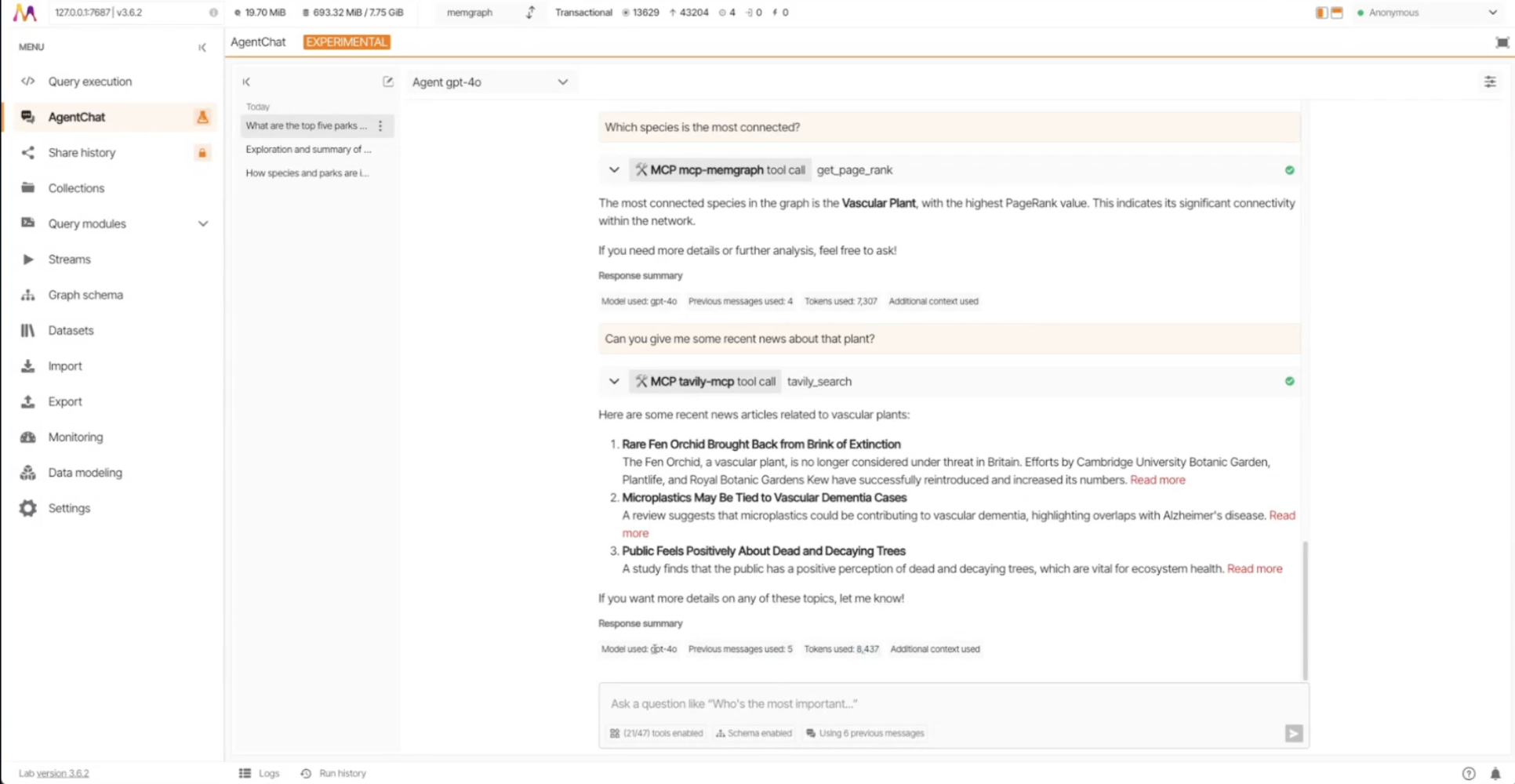

From here, the demo becomes fully interactive. The agent is asked questions about the dataset. It queries Memgraph through MCP, then generates bar and pie charts using the charting server.

When asked for recent news, it switches to the Tavily server to fetch fresh results from the web.

The final request brings everything together. The agent analyses the dataset, retrieves the relevant graph context, and produces a few charts in one continuous flow. Each step uses a different MCP server, and Lab orchestrates the entire sequence without any custom wiring.

The demo clearly shows how Memgraph Lab acts as a single place where LLMs can reason over graph data, call tools across multiple MCP servers, and produce useful outputs in real time.

No custom integration logic. No repeated wiring. Lab orchestrates everything.

If you want to see this part in action, check out the full recording.

Key Takeaway 7: MCP Client Development Challenges

Here are some of the critical challenges faced behind the scenes:

- MCP evolves very fast, and documentation sometimes lags behind

- LLMs get overwhelmed and confused when exposed to too many tools

- error handling gets tricky, as different servers can fail in different ways

- transports behave differently and need uniform handling

Overall, a single system must coordinate multiple moving parts (the user, the AI app, the LLM, and the MCP servers). This is the side users do not see, but it shapes the design choices in Lab.

Key Takeaway 8: What Is Coming Next in Memgraph Lab

Here are some Memgraph Lab roadmap highlights:

- full support for elicitation, sampling, prompts, and resources

- smoother multi-server connectivity

- enhanced agentic workflows integrating:

  - graph schema creation

  - GraphRAG

  - query tuning

  - structured/unstructured imports

- a Team Hub that centralizes LLM configurations, MCP connections, and agent configurations across teams.

If you are building agentic systems, this is the direction you want your tools to go.

Wrapping Up

This Community Call gave a clear view of why MCP exists, how it works, and how Memgraph Lab’s MCP Client provides an interoperable graph context. To see the full demo walkthrough showcasing everything in motion, watch the full community call, Interoperable Graph Context: Memgraph MCP Client.