4 Real-World Success Stories Where GraphRAG Beats Standard RAG

LLMs are powerful, but they have a glaring weakness: they often lack real-time, domain-specific context. For enterprises, that’s a dealbreaker.

You’ve likely seen LLMs hallucinate, forget previous prompts, or deliver generic answers. That’s because standard methods like cramming data into a limited context window or relying on expensive, slow-to-update fine-tuning simply don’t scale for real-world use cases. Fine-tuning on sensitive data also introduces serious security risks.

This is where GraphRAG comes in.

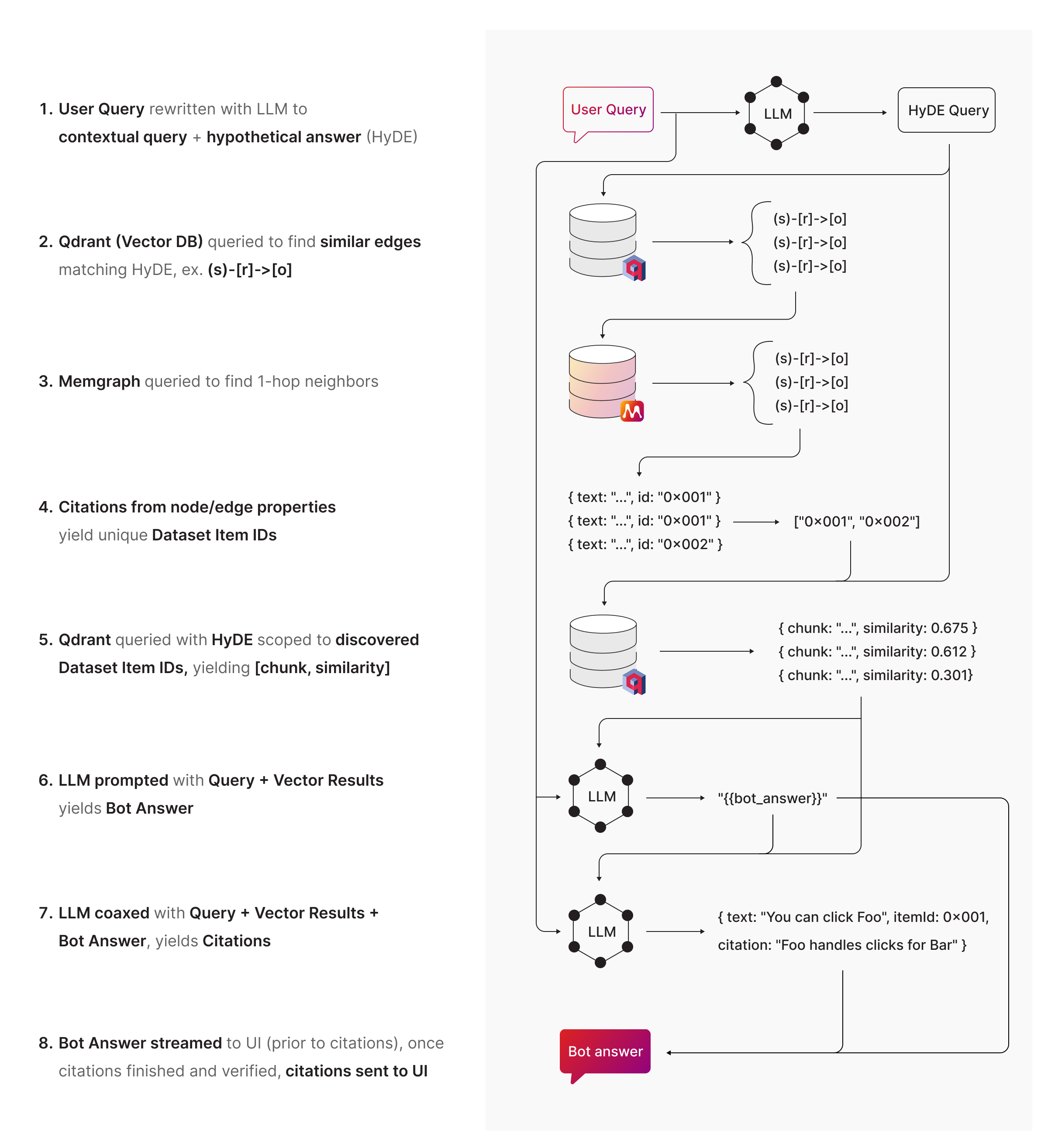

Instead of force-feeding everything into the LLM upfront, GraphRAG connects the model to a real-time knowledge graph, a structured, constantly updated source that can be queried as needed.

With GraphRAG, LLMs can:

- Access real-time information. No more outdated data.

- Reduce hallucinations. Accurate context leads to accurate responses.

- Answer complex queries. Graphs help models reason across connected data.

- Keep sensitive data secure. The model queries the graph, and the data stays private.
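To make the pattern concrete, here is a minimal sketch of the retrieve-then-prompt loop. It is an illustration only, not any vendor's API: the triples, the `retrieve_facts` and `build_prompt` helpers, and every entity name are hypothetical, and a real system would query a graph database and send the prompt to an actual LLM.

```python
# Hypothetical toy knowledge graph stored as (subject, relation, object) triples.
TRIPLES = [
    ("team_apollo", "OWNS", "service_billing"),
    ("service_billing", "DEPENDS_ON", "db_ledger"),
    ("team_zeus", "OWNS", "service_search"),
    ("service_search", "DEPENDS_ON", "db_cache"),
]


def retrieve_facts(entity, triples, hops=2):
    """Collect the triples reachable from `entity` within `hops` steps."""
    frontier = {entity}
    facts = []
    for _ in range(hops):
        next_frontier = set()
        for s, r, o in triples:
            if s in frontier and (s, r, o) not in facts:
                facts.append((s, r, o))
                next_frontier.add(o)
        frontier = next_frontier
    return facts


def build_prompt(question, facts):
    """Place the retrieved facts ahead of the question as grounding context."""
    context = "\n".join(f"{s} {r} {o}" for s, r, o in facts)
    return f"Context:\n{context}\n\nQuestion: {question}"


facts = retrieve_facts("team_apollo", TRIPLES)
prompt = build_prompt("What does team_apollo's service depend on?", facts)
```

Only the facts connected to the entity in the question reach the prompt, so the rest of the graph never leaves the data store. Updating a triple changes the next answer without retraining anything.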

Here are four real-world examples of how organizations are using GraphRAG to leverage the power of LLMs.

1. NASA: A People Knowledge Graph for Workforce Intelligence

NASA had a people problem. Not a personnel issue, but a data one. With thousands of employees, overlapping projects, and deep institutional knowledge buried across PDFs, documents, and databases, finding out who knew what was nearly impossible.

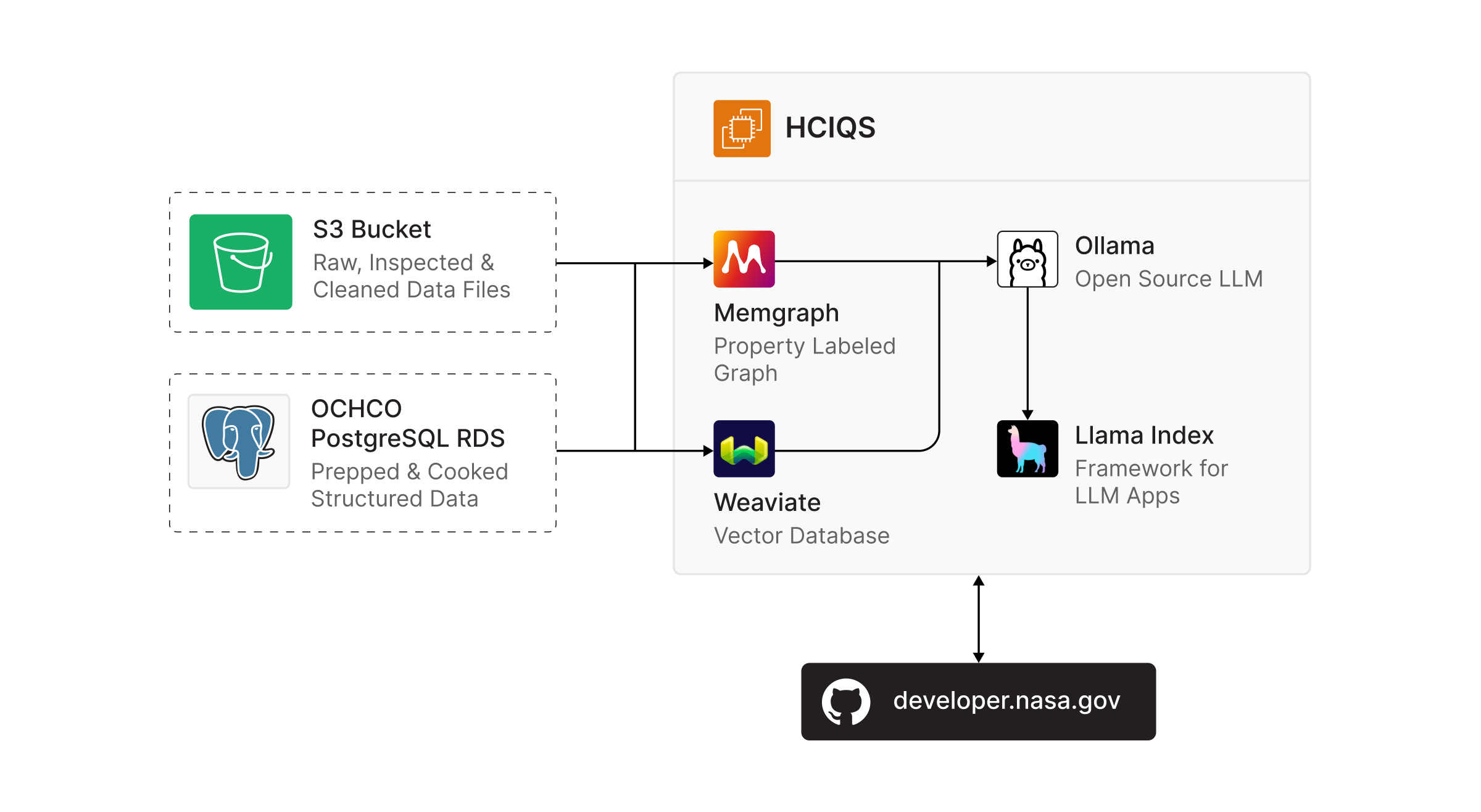

To address this, NASA's People Analytics team built a People Graph using Memgraph, capturing structured relationships between people, projects, departments, and areas of expertise.

Using this as a foundation, they began developing GraphRAG pipelines powered by large language models. These pipelines extract and enrich personnel-related data from unstructured sources such as resumes, project descriptions, and reports.

The system is designed to make employee knowledge easily accessible. By analyzing the intent, context, and sentiment of natural language queries, it helps answer complex workforce-related questions.

A vector-only RAG system would fall short here. For example, if someone searched “Who worked on autonomous space robotics?” a vector model might match resumes or documents that mention those words, even in unrelated contexts. It might return someone who once referenced “robotics” in a seminar and “space” in a training course.

GraphRAG, by contrast, connects real project history, expertise, and collaboration by leveraging dynamically updated graph relationships and multi-hop reasoning. As a result, the answers reflect verified roles, not loose, semantically similar matches.
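The difference can be sketched with toy data. Everything below is hypothetical (the names, bios, and edges are invented for illustration and are not NASA's schema), and the keyword check is only a crude stand-in for embedding similarity, not a real vector search.

```python
# Hypothetical free-text bios, as a text-only retriever would see them.
BIOS = {
    "alice": "Attended a robotics seminar and a space safety training course.",
    "bob": "Led guidance software for the rover arm.",
}

# Hypothetical graph edges: person -> projects, project -> topics.
WORKED_ON = {"alice": ["payroll_migration"], "bob": ["rover_arm"]}
HAS_TOPIC = {
    "rover_arm": ["autonomous space robotics"],
    "payroll_migration": ["finance systems"],
}


def keyword_match(query_terms, bios):
    """Crude stand-in for similarity search: bios that mention every term."""
    return sorted(
        person
        for person, text in bios.items()
        if all(term in text.lower() for term in query_terms)
    )


def graph_match(topic, worked_on, has_topic):
    """Two-hop traversal: person -> project -> topic."""
    return sorted(
        person
        for person, projects in worked_on.items()
        if any(topic in has_topic.get(project, []) for project in projects)
    )


text_hits = keyword_match(["space", "robotics"], BIOS)
graph_hits = graph_match("autonomous space robotics", WORKED_ON, HAS_TOPIC)
```

In this toy setup the text match returns the person who merely mentioned both words, while the traversal returns the person with a recorded project relationship to the topic.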

The results? Faster onboarding, smarter internal mobility, and quicker identification of in-house experts. The organization now navigates its own knowledge with clarity and speed.

Read Full Story | Watch Full Community Call

2. Precina Health: Optimizing Diabetes Management

Managing chronic conditions like Type 2 diabetes is a global health challenge.

Visualizing complex patient journeys is key to tackling it, and Precina Health took that on directly.

They leveraged GraphRAG to systematize Type 2 diabetes management, helping patients reduce their Hemoglobin A1C (HbA1C) by 1% per month (compared to typical annual reductions). Their approach factored in not only clinical care but also social determinants and behavioral insights.

They built a GraphRAG system, named P3C (Provider-Patient CoPilot), that connected medical records with social and behavioral data in real time. That meant providers weren’t just seeing A1C levels; they were getting full context on why those levels might spike or drop. The system then suggests small, patient-specific adjustments using AI, helping care providers make better decisions. Here’s how it works:

With Memgraph, they powered multi-hop reasoning to trace patient outcomes through layers of cause-and-effect. Standard RAG couldn’t compute those relationships. GraphRAG did.

The results? A 1% monthly drop in HbA1C across patients, which is 12x faster than standard care.

Read Full Story | Watch Full Community Call

3. Cedars-Sinai: Knowledge-Aware Automated Machine Learning in Alzheimer’s Research

Beyond big data, Alzheimer’s research is focused on meaningful connections across genes, drugs, and clinical trials. Cedars-Sinai built a knowledge graph of over 1.6 million edges using Memgraph to do just that.

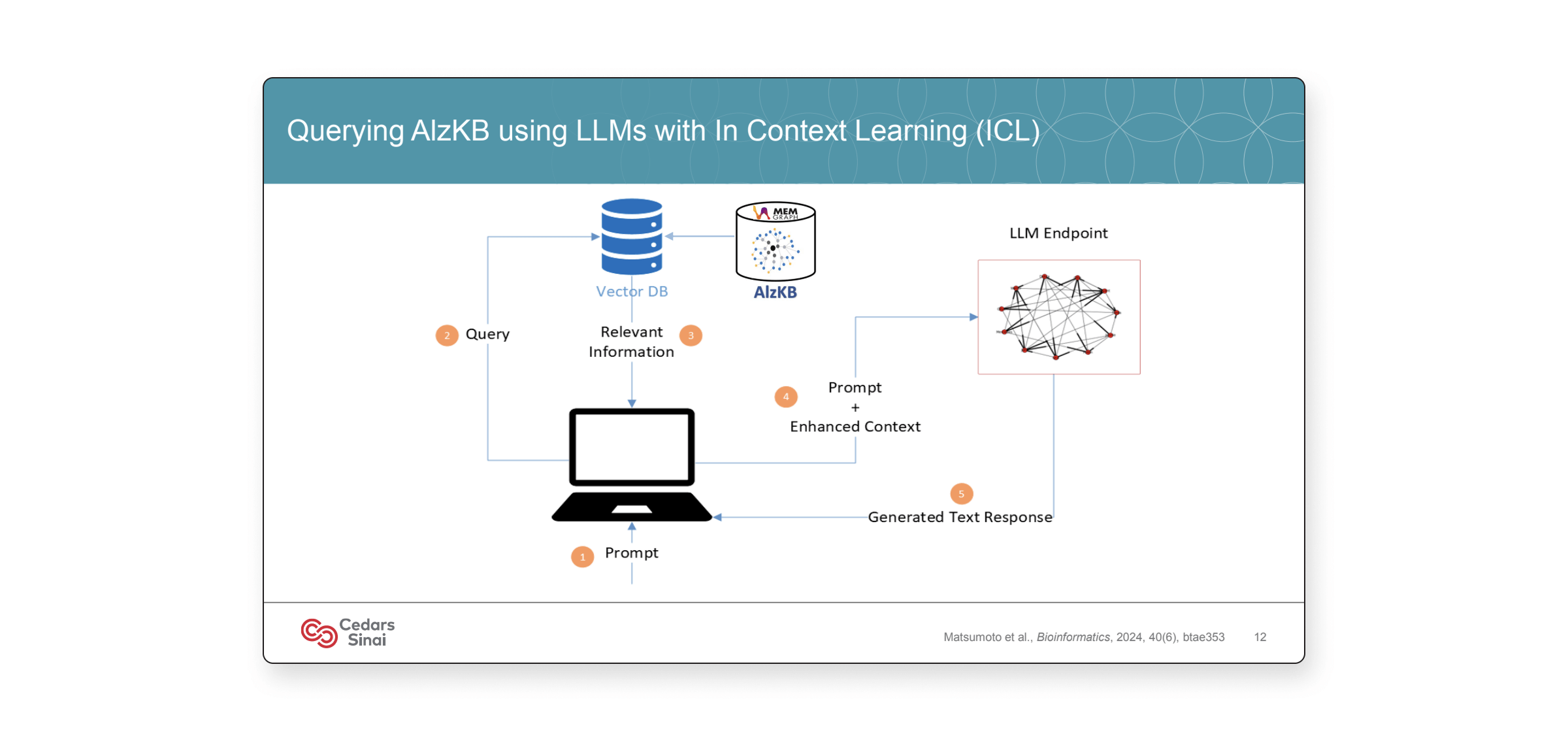

They didn’t just stop there. When standard LLMs failed to understand nuanced medical queries, Cedars-Sinai built Knowledge Retrieval Augmented Generation ENgine (KRAGEN). This GraphRAG system transformed how researchers explored data.

KRAGEN breaks down complex questions, retrieves precise contextual insights from their knowledge graph, and provides answers with high accuracy. Their Alzheimer's Disease Knowledge Base (AlzKB) uses Memgraph as its core, integrating over 20 biomedical sources to provide essential background information to machine learning models.

Their advanced AI agent, ESCARGOT, even beat ChatGPT in multi-hop medical reasoning (94.2% accuracy vs. 49.9%). That is research-grade reliability.

If Cedars-Sinai used only standard RAG, their system wouldn’t be able to break down a query like “Which drug-treated genes overlap with recent trial results?” let alone return answers grounded in structured biomedical data. The graph lets their models reason from genes to drugs to trials.
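A gene, drug, and trial question of this kind amounts to a multi-hop join. The sketch below shows the shape of that traversal using placeholder identifiers only; none of the edges are real biomedical facts, and the function name and data layout are assumptions for illustration, not how KRAGEN or AlzKB is implemented.

```python
# Placeholder identifiers only; none of these are real biomedical facts.
DRUG_TARGETS_GENE = [("DRUG_X", "GENE_A"), ("DRUG_Y", "GENE_B")]
TRIAL_TESTS_DRUG = [("TRIAL_1", "DRUG_X"), ("TRIAL_2", "DRUG_Z")]
RECENT_TRIALS = {"TRIAL_1", "TRIAL_2"}


def genes_linked_to_recent_trials(targets, tests, recent):
    """Follow gene <- drug <- trial and keep each full path as evidence."""
    trials_by_drug = {}
    for trial, drug in tests:
        if trial in recent:
            trials_by_drug.setdefault(drug, []).append(trial)

    paths = []
    for drug, gene in targets:
        for trial in trials_by_drug.get(drug, []):
            paths.append((gene, drug, trial))
    return paths


paths = genes_linked_to_recent_trials(
    DRUG_TARGETS_GENE, TRIAL_TESTS_DRUG, RECENT_TRIALS
)
```

Returning the whole path, rather than just the gene, is what lets an answer cite the drug and trial that connect it to the question.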

The results? This approach surfaced new treatment possibilities, including Temazepam and Ibuprofen, by guiding the algorithm toward meaningful gene-drug interactions that might have otherwise gone unnoticed.

Read Full Story | Watch Full Community Call

4. Microchip: Empowering Customer Support with LLMs

Microchip Technology faced a critical challenge: their customer service team couldn’t directly access the data needed to answer order status or production-related inquiries. Instead, they had to rely on engineering or operations teams, which created delays, bottlenecks, and internal inefficiencies.

To solve this, they are building a "Workspace Assistant," a GraphRAG-powered chatbot supported by Memgraph and a private custom LLM. Unlike traditional RAG systems, this assistant can answer domain-specific queries by retrieving structured insights from real-time operational graphs.

For example, when a customer asked, "Why is my sales order late?" the chatbot could trace relationships across orders, suppliers, and process logs to give a concrete, source-backed explanation.
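One way to picture that trace is a breadth-first search that returns the chain of relationships leading from the order to a delay record. The graph, node names, and `find_path` helper below are hypothetical and heavily simplified; they are not Microchip's data model.

```python
from collections import deque

# Hypothetical operational graph: node -> list of (relation, neighbor).
GRAPH = {
    "sales_order_1001": [("CONTAINS", "part_77")],
    "part_77": [("SOURCED_FROM", "supplier_acme"), ("BUILT_ON", "line_3")],
    "supplier_acme": [("LOGGED", "log: shipment delayed")],
    "line_3": [("LOGGED", "log: running normally")],
}


def find_path(graph, start, is_goal):
    """Breadth-first search; returns the first path ending in a goal node."""
    queue = deque([[("START", start)]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1][1]
        if is_goal(node):
            return path
        for relation, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [(relation, neighbor)])
    return None


path = find_path(GRAPH, "sales_order_1001", lambda node: "delayed" in node)
# Skip the START marker and render the remaining hops as a readable chain.
explanation = " -> ".join(f"[{rel}] {node}" for rel, node in path[1:])
```

The returned path doubles as the source trail: each hop names the relationship that justifies the next step, which is what makes the answer checkable.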

The solution offered more than just faster answers. It enabled:

- Secure and role-based access to internal data using Memgraph’s enterprise-grade access control.

- Scalable architecture to support multiple business units, each with different data retrieval needs.

- An agent-based architecture with a universal interface that separated user interaction, chain selection, and response generation for better modularity and flexibility.
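The separation of concerns in that last point can be sketched in a few functions. This is a simplified assumption of how such a pipeline might be wired, with hypothetical roles, labels, and records. In the system described above, access control is enforced by Memgraph itself; the application-side filter here only stands in for that.

```python
# Hypothetical roles and the node labels each role may read.
ROLE_PERMISSIONS = {
    "customer_service": {"Order", "Shipment"},
    "engineering": {"Order", "Shipment", "ProcessLog"},
}

# Hypothetical records each retrieval chain would fetch, tagged by label.
CHAIN_RECORDS = {
    "order_status": [("Order", "SO-1 is open"), ("ProcessLog", "step 4 failed")],
    "general": [],
}


def select_chain(question):
    """Chain selection: pick a retrieval routine from the question text."""
    return "order_status" if "order" in question.lower() else "general"


def retrieve(chain, role):
    """Retrieval: keep only records whose label the role is allowed to read."""
    allowed = ROLE_PERMISSIONS.get(role, set())
    return [text for label, text in CHAIN_RECORDS[chain] if label in allowed]


def generate_response(facts):
    """Response generation: a real system would pass these facts to an LLM."""
    return "; ".join(facts) if facts else "No accessible data for this question."


def handle(question, role):
    """Single user-facing entry point that wires the three stages together."""
    return generate_response(retrieve(select_chain(question), role))


cs_answer = handle("Why is my sales order late?", "customer_service")
eng_answer = handle("Why is my sales order late?", "engineering")
```

Because each stage is its own function, a new business unit could add a chain or a role without touching the entry point or the response step.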

The results? Microchip’s customer service team gained instant, intuitive access to complex data. Meanwhile, engineering and ops teams were freed from routine query handling, letting them focus on high-priority work.

Read Full Story | Watch Full Community Call

The Verdict: GraphRAG Isn’t Experimental. It’s Essential.

These four examples aren’t just theoretical. They are grounded implementations built to tackle real-world, high-stakes problems.

If you’re still wondering whether GraphRAG is worth the effort, maybe ask a better question:

Can your GenAI system afford to ignore context?

Because as these examples show, the future of AI isn’t just generating answers.

It’s generating the right ones using graphs.